%load_ext autoreload

%autoreload 2

Food Vision Big

Milestone Project 1

In the previous notebook (transfer learning part 3: scaling up), we built the Food Vision Mini: a transfer learning model which beat the original results of the Food101 paper with only 10% of the data. In this notebook, we are going to use all of the data of the Food101 dataset.

- Train: 75,750

- Test: 25,250

- Number of classes: 101

Goal: Beat DeepFood, a 2016 paper which used a CNN trained for 2-3 days to achieve 77.4% top-1 accuracy.

Note: top-1 accuracy means "accuracy for the top softmax activation value output by the model". Top-5 accuracy counts a prediction as correct if the actual class lies anywhere in the five highest activations. Top-1 accuracy can therefore never be higher than top-5 accuracy.

Idea: Top-n accuracy counts a prediction as correct if the true label lies in the top-n activations. Could we define another performance metric along the same lines: the average position (rank) n of the correct label when the softmax probabilities are sorted in descending order? The lower the average n, the better the model's performance, with 1 being the best possible value.
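A minimal sketch of both ideas in plain Python, using made-up softmax outputs. The helper names (rank_of_true_label, top_k_accuracy, mean_rank) and the toy numbers are assumptions for illustration and are not part of the notebook's code.

```python
def rank_of_true_label(probs, true_label):
    """1-based position of the true label when classes are sorted by descending probability.

    Ties are broken in favour of the lower class index (sorted() is stable).
    """
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return order.index(true_label) + 1


def top_k_accuracy(all_probs, labels, k):
    """Fraction of samples whose true label is among the k highest probabilities."""
    hits = sum(rank_of_true_label(p, y) <= k for p, y in zip(all_probs, labels))
    return hits / len(labels)


def mean_rank(all_probs, labels):
    """Average rank of the true label (1.0 is a perfect model)."""
    ranks = [rank_of_true_label(p, y) for p, y in zip(all_probs, labels)]
    return sum(ranks) / len(ranks)


# Toy softmax outputs for 3 samples over 4 classes (made-up numbers)
toy_probs = [
    [0.70, 0.10, 0.15, 0.05],  # true label 0 has rank 1
    [0.20, 0.50, 0.25, 0.05],  # true label 2 has rank 2
    [0.40, 0.30, 0.20, 0.10],  # true label 3 has rank 4
]
toy_labels = [0, 2, 3]

print("top-1:", top_k_accuracy(toy_probs, toy_labels, k=1))
print("top-2:", top_k_accuracy(toy_probs, toy_labels, k=2))
print("mean rank:", mean_rank(toy_probs, toy_labels))
```

Unlike top-n accuracy, the mean rank also penalises how far down the list a missed label sits, so two models with the same top-1 accuracy can still be told apart.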

ADD TABLE FOR Food-Vision-Big vs Food-Vision-Mini Comparison

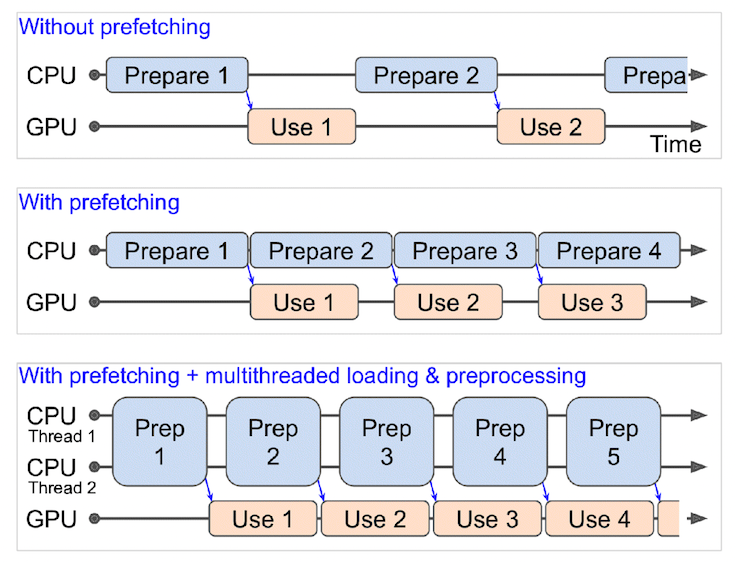

Alongside attempting to beat the DeepFood paper, we're going to learn about two methods to significantly improve the speed of our model training:

- Prefetching

- Mixed precision training

Contents of the Notebook:

- [ ] Using Tensorflow datasets to download and explore the data as opposed to downloading from elsewhere and then loading. (Is there a way to dump the dataset somewhere, if the dataset is large?)

- [ ] Create preprocessing functions for our data

- [ ] Batching and preparing datasets (datasets are prefetched and preprocessed faster)

- [ ] Create modelling callbacks

- [ ] Setting up mixed precision training (requires a GPU with compute capability of 7.0 or higher)

- [ ] Build a feature extraction model (transfer learning)

- [ ] Fine tune our feature extraction model

- [ ] Viewing the training results on Tensorboard

!pip install tensorflow==2.4

Collecting tensorflow==2.4

Downloading https://files.pythonhosted.org/packages/94/0a/012cc33c643d844433d13001dd1db179e7020b05ddbbd0a9dc86c38a8efa/tensorflow-2.4.0-cp37-cp37m-manylinux2010_x86_64.whl (394.7MB)

|████████████████████████████████| 394.7MB 45kB/s

Collecting tensorflow-estimator<2.5.0,>=2.4.0rc0

Downloading https://files.pythonhosted.org/packages/74/7e/622d9849abf3afb81e482ffc170758742e392ee129ce1540611199a59237/tensorflow_estimator-2.4.0-py2.py3-none-any.whl (462kB)

|████████████████████████████████| 471kB 34.5MB/s

Collecting h5py~=2.10.0

Downloading https://files.pythonhosted.org/packages/3f/c0/abde58b837e066bca19a3f7332d9d0493521d7dd6b48248451a9e3fe2214/h5py-2.10.0-cp37-cp37m-manylinux1_x86_64.whl (2.9MB)

|████████████████████████████████| 2.9MB 43.6MB/s

Requirement already satisfied: typing-extensions~=3.7.4 in /usr/local/lib/python3.7/dist-packages (from tensorflow==2.4) (3.7.4.3)

Requirement already satisfied: astunparse~=1.6.3 in /usr/local/lib/python3.7/dist-packages (from tensorflow==2.4) (1.6.3)

Requirement already satisfied: protobuf>=3.9.2 in /usr/local/lib/python3.7/dist-packages (from tensorflow==2.4) (3.12.4)

Requirement already satisfied: flatbuffers~=1.12.0 in /usr/local/lib/python3.7/dist-packages (from tensorflow==2.4) (1.12)

Requirement already satisfied: keras-preprocessing~=1.1.2 in /usr/local/lib/python3.7/dist-packages (from tensorflow==2.4) (1.1.2)

Requirement already satisfied: tensorboard~=2.4 in /usr/local/lib/python3.7/dist-packages (from tensorflow==2.4) (2.5.0)

Requirement already satisfied: six~=1.15.0 in /usr/local/lib/python3.7/dist-packages (from tensorflow==2.4) (1.15.0)

Requirement already satisfied: wheel~=0.35 in /usr/local/lib/python3.7/dist-packages (from tensorflow==2.4) (0.36.2)

Collecting gast==0.3.3

Downloading https://files.pythonhosted.org/packages/d6/84/759f5dd23fec8ba71952d97bcc7e2c9d7d63bdc582421f3cd4be845f0c98/gast-0.3.3-py2.py3-none-any.whl

Requirement already satisfied: numpy~=1.19.2 in /usr/local/lib/python3.7/dist-packages (from tensorflow==2.4) (1.19.5)

Requirement already satisfied: termcolor~=1.1.0 in /usr/local/lib/python3.7/dist-packages (from tensorflow==2.4) (1.1.0)

Requirement already satisfied: opt-einsum~=3.3.0 in /usr/local/lib/python3.7/dist-packages (from tensorflow==2.4) (3.3.0)

Requirement already satisfied: wrapt~=1.12.1 in /usr/local/lib/python3.7/dist-packages (from tensorflow==2.4) (1.12.1)

Collecting grpcio~=1.32.0

Downloading https://files.pythonhosted.org/packages/06/54/1c8be62beafe7fb1548d2968e518ca040556b46b0275399d4f3186c56d79/grpcio-1.32.0-cp37-cp37m-manylinux2014_x86_64.whl (3.8MB)

|████████████████████████████████| 3.8MB 38.2MB/s

Requirement already satisfied: google-pasta~=0.2 in /usr/local/lib/python3.7/dist-packages (from tensorflow==2.4) (0.2.0)

Requirement already satisfied: absl-py~=0.10 in /usr/local/lib/python3.7/dist-packages (from tensorflow==2.4) (0.12.0)

Requirement already satisfied: setuptools in /usr/local/lib/python3.7/dist-packages (from protobuf>=3.9.2->tensorflow==2.4) (57.0.0)

Requirement already satisfied: markdown>=2.6.8 in /usr/local/lib/python3.7/dist-packages (from tensorboard~=2.4->tensorflow==2.4) (3.3.4)

Requirement already satisfied: tensorboard-plugin-wit>=1.6.0 in /usr/local/lib/python3.7/dist-packages (from tensorboard~=2.4->tensorflow==2.4) (1.8.0)

Requirement already satisfied: google-auth-oauthlib<0.5,>=0.4.1 in /usr/local/lib/python3.7/dist-packages (from tensorboard~=2.4->tensorflow==2.4) (0.4.4)

Requirement already satisfied: werkzeug>=0.11.15 in /usr/local/lib/python3.7/dist-packages (from tensorboard~=2.4->tensorflow==2.4) (1.0.1)

Requirement already satisfied: google-auth<2,>=1.6.3 in /usr/local/lib/python3.7/dist-packages (from tensorboard~=2.4->tensorflow==2.4) (1.31.0)

Requirement already satisfied: requests<3,>=2.21.0 in /usr/local/lib/python3.7/dist-packages (from tensorboard~=2.4->tensorflow==2.4) (2.23.0)

Requirement already satisfied: tensorboard-data-server<0.7.0,>=0.6.0 in /usr/local/lib/python3.7/dist-packages (from tensorboard~=2.4->tensorflow==2.4) (0.6.1)

Requirement already satisfied: importlib-metadata; python_version < "3.8" in /usr/local/lib/python3.7/dist-packages (from markdown>=2.6.8->tensorboard~=2.4->tensorflow==2.4) (4.5.0)

Requirement already satisfied: requests-oauthlib>=0.7.0 in /usr/local/lib/python3.7/dist-packages (from google-auth-oauthlib<0.5,>=0.4.1->tensorboard~=2.4->tensorflow==2.4) (1.3.0)

Requirement already satisfied: rsa<5,>=3.1.4; python_version >= "3.6" in /usr/local/lib/python3.7/dist-packages (from google-auth<2,>=1.6.3->tensorboard~=2.4->tensorflow==2.4) (4.7.2)

Requirement already satisfied: cachetools<5.0,>=2.0.0 in /usr/local/lib/python3.7/dist-packages (from google-auth<2,>=1.6.3->tensorboard~=2.4->tensorflow==2.4) (4.2.2)

Requirement already satisfied: pyasn1-modules>=0.2.1 in /usr/local/lib/python3.7/dist-packages (from google-auth<2,>=1.6.3->tensorboard~=2.4->tensorflow==2.4) (0.2.8)

Requirement already satisfied: chardet<4,>=3.0.2 in /usr/local/lib/python3.7/dist-packages (from requests<3,>=2.21.0->tensorboard~=2.4->tensorflow==2.4) (3.0.4)

Requirement already satisfied: idna<3,>=2.5 in /usr/local/lib/python3.7/dist-packages (from requests<3,>=2.21.0->tensorboard~=2.4->tensorflow==2.4) (2.10)

Requirement already satisfied: certifi>=2017.4.17 in /usr/local/lib/python3.7/dist-packages (from requests<3,>=2.21.0->tensorboard~=2.4->tensorflow==2.4) (2021.5.30)

Requirement already satisfied: urllib3!=1.25.0,!=1.25.1,<1.26,>=1.21.1 in /usr/local/lib/python3.7/dist-packages (from requests<3,>=2.21.0->tensorboard~=2.4->tensorflow==2.4) (1.24.3)

Requirement already satisfied: zipp>=0.5 in /usr/local/lib/python3.7/dist-packages (from importlib-metadata; python_version < "3.8"->markdown>=2.6.8->tensorboard~=2.4->tensorflow==2.4) (3.4.1)

Requirement already satisfied: oauthlib>=3.0.0 in /usr/local/lib/python3.7/dist-packages (from requests-oauthlib>=0.7.0->google-auth-oauthlib<0.5,>=0.4.1->tensorboard~=2.4->tensorflow==2.4) (3.1.1)

Requirement already satisfied: pyasn1>=0.1.3 in /usr/local/lib/python3.7/dist-packages (from rsa<5,>=3.1.4; python_version >= "3.6"->google-auth<2,>=1.6.3->tensorboard~=2.4->tensorflow==2.4) (0.4.8)

Installing collected packages: tensorflow-estimator, h5py, gast, grpcio, tensorflow

Found existing installation: tensorflow-estimator 2.5.0

Uninstalling tensorflow-estimator-2.5.0:

Successfully uninstalled tensorflow-estimator-2.5.0

Found existing installation: h5py 3.1.0

Uninstalling h5py-3.1.0:

Successfully uninstalled h5py-3.1.0

Found existing installation: gast 0.4.0

Uninstalling gast-0.4.0:

Successfully uninstalled gast-0.4.0

Found existing installation: grpcio 1.34.1

Uninstalling grpcio-1.34.1:

Successfully uninstalled grpcio-1.34.1

Found existing installation: tensorflow 2.5.0

Uninstalling tensorflow-2.5.0:

Successfully uninstalled tensorflow-2.5.0

Successfully installed gast-0.3.3 grpcio-1.32.0 h5py-2.10.0 tensorflow-2.4.0 tensorflow-estimator-2.4.0

Check the GPU

Mixed precision training relies on the Keras mixed precision API, which became stable (non-experimental) in Tensorflow 2.4.0.

- It uses a combination of single precision (float32) and half-precision (float16) data types to speed up the training (up to 3x on modern GPUs)

- Tensorflow documentation on mixed precision

- A GPU with compute capability score of 7.0+ is required

- On Google Colab: P100 (not compatible), K80 (not compatible) and T4 GPUs (compatible).

- Nvidia GPU compute capabilities

Note: if you run the cell below and see a P100 or K80, go to Runtime -> Factory Reset Runtime and retry to get a T4.

!nvidia-smi -L

GPU 0: Tesla V100-SXM2-16GB (UUID: GPU-b8b30eb1-aa56-e51a-09fd-e3b30c56f81c)
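The compatibility check can also be written down as a small lookup. This is a sketch only: the table covers just the GPU models named in this notebook, and the function name supports_mixed_precision is a hypothetical helper, not a Tensorflow or Nvidia API.

```python
import re

# Compute capabilities (major, minor) of the GPU models mentioned in this notebook
COMPUTE_CAPABILITY = {"K80": (3, 7), "P100": (6, 0), "V100": (7, 0), "T4": (7, 5)}


def supports_mixed_precision(nvidia_smi_line, minimum=(7, 0)):
    """Return True/False for a known GPU model, or None if the model is not in the table."""
    for model, capability in COMPUTE_CAPABILITY.items():
        if re.search(rf"\b{re.escape(model)}\b", nvidia_smi_line):
            return capability >= minimum
    return None


# The line printed by `nvidia-smi -L` above (UUID omitted)
print(supports_mixed_precision("GPU 0: Tesla V100-SXM2-16GB"))
```

Returning None for unknown models keeps the helper from guessing; in that case the Nvidia compute capability table is the place to look.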

# Check Tensorflow version

import tensorflow as tf

print(tf.__version__)

2.4.0

Change working directory to project (Google Drive)

import os

os.chdir('/content/drive/MyDrive/projects/Tensorflow-tutorial-Daniel-Bourke/notebooks/')

os.listdir()

['07-Milestone-Project-1-Food-Vision-Big.ipynb', '.ipynb_checkpoints', '03B-10-Food-Multiclass-Classification.ipynb', '04-Transfer-Learning-Part1-Feature-Extraction.ipynb', '01A-tensorflow-neural-network-regression.ipynb', '01B-tensorflow-neural-network-regression.ipynb', '00-tensorflow-fundamentals.ipynb', '05-Transfer-Learning-Part2-Fine-Tuning.ipynb', 'Z-Check-Imports.ipynb', '02B-Fashion-MNIST-larger-example.ipynb', '03A-Pizza-Steak-Image-Classification.ipynb', '01C-tensorflow-neural-network-regression.ipynb', '06-Transfer-Learning-Part3-Food-Vision-Mini.ipynb', '02A-tensorflow-playground-reimplementation.ipynb', '08-NLP-Basics-in-Tensorflow-Disaster-Tweets.ipynb']

Use Tensorflow Datasets to Download Data

In the previous notebooks, we downloaded the Food101 dataset from Google Storage. This is the typical workflow: we fetch the data files and keep them on some kind of storage (on the cloud, if they are large).

- Then we connect to that storage and fetch from it

However, there is another way to get datasets ready to use with Tensorflow. Many popular machine learning datasets (often used for benchmarking), can be accessed through Tensorflow Datasets (TFDS)

What is Tensorflow Datasets:

Pros:

- Load data already in tensors

- Practice on well established datasets

- Experiment with different data loading techniques

Cons:

- Datasets are static (real world datasets change)

- Might not be suited for our particular problem

import tensorflow_datasets as tfds

datasets_list = tfds.list_builders()

datasets_list

['abstract_reasoning', 'accentdb', 'aeslc', 'aflw2k3d', 'ag_news_subset', 'ai2_arc', 'ai2_arc_with_ir', 'amazon_us_reviews', 'anli', 'arc', 'bair_robot_pushing_small', 'bccd', 'beans', 'big_patent', 'bigearthnet', 'billsum', 'binarized_mnist', 'binary_alpha_digits', 'blimp', 'bool_q', 'c4', 'caltech101', 'caltech_birds2010', 'caltech_birds2011', 'cars196', 'cassava', 'cats_vs_dogs', 'celeb_a', 'celeb_a_hq', 'cfq', 'chexpert', 'cifar10', 'cifar100', 'cifar10_1', 'cifar10_corrupted', 'citrus_leaves', 'cityscapes', 'civil_comments', 'clevr', 'clic', 'clinc_oos', 'cmaterdb', 'cnn_dailymail', 'coco', 'coco_captions', 'coil100', 'colorectal_histology', 'colorectal_histology_large', 'common_voice', 'coqa', 'cos_e', 'cosmos_qa', 'covid19sum', 'crema_d', 'curated_breast_imaging_ddsm', 'cycle_gan', 'deep_weeds', 'definite_pronoun_resolution', 'dementiabank', 'diabetic_retinopathy_detection', 'div2k', 'dmlab', 'downsampled_imagenet', 'dsprites', 'dtd', 'duke_ultrasound', 'emnist', 'eraser_multi_rc', 'esnli', 'eurosat', 'fashion_mnist', 'flic', 'flores', 'food101', 'forest_fires', 'fuss', 'gap', 'geirhos_conflict_stimuli', 'genomics_ood', 'german_credit_numeric', 'gigaword', 'glue', 'goemotions', 'gpt3', 'groove', 'gtzan', 'gtzan_music_speech', 'hellaswag', 'higgs', 'horses_or_humans', 'i_naturalist2017', 'imagenet2012', 'imagenet2012_corrupted', 'imagenet2012_real', 'imagenet2012_subset', 'imagenet_a', 'imagenet_r', 'imagenet_resized', 'imagenet_v2', 'imagenette', 'imagewang', 'imdb_reviews', 'irc_disentanglement', 'iris', 'kitti', 'kmnist', 'lfw', 'librispeech', 'librispeech_lm', 'libritts', 'ljspeech', 'lm1b', 'lost_and_found', 'lsun', 'malaria', 'math_dataset', 'mctaco', 'mnist', 'mnist_corrupted', 'movie_lens', 'movie_rationales', 'movielens', 'moving_mnist', 'multi_news', 'multi_nli', 'multi_nli_mismatch', 'natural_questions', 'natural_questions_open', 'newsroom', 'nsynth', 'nyu_depth_v2', 'omniglot', 'open_images_challenge2019_detection', 'open_images_v4', 'openbookqa', 
'opinion_abstracts', 'opinosis', 'opus', 'oxford_flowers102', 'oxford_iiit_pet', 'para_crawl', 'patch_camelyon', 'paws_wiki', 'paws_x_wiki', 'pet_finder', 'pg19', 'places365_small', 'plant_leaves', 'plant_village', 'plantae_k', 'qa4mre', 'qasc', 'quickdraw_bitmap', 'radon', 'reddit', 'reddit_disentanglement', 'reddit_tifu', 'resisc45', 'robonet', 'rock_paper_scissors', 'rock_you', 'salient_span_wikipedia', 'samsum', 'savee', 'scan', 'scene_parse150', 'scicite', 'scientific_papers', 'sentiment140', 'shapes3d', 'smallnorb', 'snli', 'so2sat', 'speech_commands', 'spoken_digit', 'squad', 'stanford_dogs', 'stanford_online_products', 'starcraft_video', 'stl10', 'sun397', 'super_glue', 'svhn_cropped', 'ted_hrlr_translate', 'ted_multi_translate', 'tedlium', 'tf_flowers', 'the300w_lp', 'tiny_shakespeare', 'titanic', 'trec', 'trivia_qa', 'tydi_qa', 'uc_merced', 'ucf101', 'vctk', 'vgg_face2', 'visual_domain_decathlon', 'voc', 'voxceleb', 'voxforge', 'waymo_open_dataset', 'web_questions', 'wider_face', 'wiki40b', 'wikihow', 'wikipedia', 'wikipedia_toxicity_subtypes', 'wine_quality', 'winogrande', 'wmt14_translate', 'wmt15_translate', 'wmt16_translate', 'wmt17_translate', 'wmt18_translate', 'wmt19_translate', 'wmt_t2t_translate', 'wmt_translate', 'wordnet', 'xnli', 'xquad', 'xsum', 'yelp_polarity_reviews', 'yes_no']

print('food101' in datasets_list)

True

This means the food101 dataset is available!

(train_data, test_data), ds_info = tfds.load(name='food101',

split=['train', 'validation'],

shuffle_files=True,

as_supervised=True, # download data in tuple format (sample, label)

with_info=True)

ds_info

tfds.core.DatasetInfo(

name='food101',

version=2.0.0,

description='This dataset consists of 101 food categories, with 101'000 images. For each class, 250 manually reviewed test images are provided as well as 750 training images. On purpose, the training images were not cleaned, and thus still contain some amount of noise. This comes mostly in the form of intense colors and sometimes wrong labels. All images were rescaled to have a maximum side length of 512 pixels.',

homepage='https://www.vision.ee.ethz.ch/datasets_extra/food-101/',

features=FeaturesDict({

'image': Image(shape=(None, None, 3), dtype=tf.uint8),

'label': ClassLabel(shape=(), dtype=tf.int64, num_classes=101),

}),

total_num_examples=101000,

splits={

'train': 75750,

'validation': 25250,

},

supervised_keys=('image', 'label'),

citation="""@inproceedings{bossard14,

title = {Food-101 -- Mining Discriminative Components with Random Forests},

author = {Bossard, Lukas and Guillaumin, Matthieu and Van Gool, Luc},

booktitle = {European Conference on Computer Vision},

year = {2014}

}""",

redistribution_info=,

)

ds_info.features

FeaturesDict({

'image': Image(shape=(None, None, 3), dtype=tf.uint8),

'label': ClassLabel(shape=(), dtype=tf.int64, num_classes=101),

})

# Get the class names

CLASS_NAMES = ds_info.features['label'].names

CLASS_NAMES[:10]

['apple_pie', 'baby_back_ribs', 'baklava', 'beef_carpaccio', 'beef_tartare', 'beet_salad', 'beignets', 'bibimbap', 'bread_pudding', 'breakfast_burrito']

Exploring the Food101 data from Tensorflow Datasets

Let us explore the following things in our data:

- The shape of our input data (image tensors)

- Datatype of the input data

- What the labels look like (whether integers or one-hot)

# Take one sample off the training data

train_one_sample = train_data.take(1) # samples are in the format (image_tensor, label)

Because we used as_supervised=True in tfds.load(), the data samples come in the tuple format structure (data, label) i.e. (image_tensor, label)

# Let's see what our sample data looks like

train_one_sample

<TakeDataset shapes: ((None, None, 3), ()), types: (tf.uint8, tf.int64)>

Let's "loop through" our single training sample and get some info from the image_tensor and label

# Output info about our training sample

for image, label in train_one_sample:
    print(f'''
Image shape: {image.shape}
Image dtype: {image.dtype}
Target class: {label}
Class name: {CLASS_NAMES[label.numpy()]}
''')

Image shape: (384, 512, 3)

Image dtype: <dtype: 'uint8'>

Target class: 97

Class name: takoyaki

Because we set the shuffle_files=True parameter in tfds.load(), running the above cell will give a different result every time. The dimensions of the images may also differ, for example (512, 512, 3) for one sample and (512, 342, 3) for another.

# Let's check out the image tensor

image

<tf.Tensor: shape=(384, 512, 3), dtype=uint8, numpy=

array([[[ 54, 30, 26],

[ 57, 33, 29],

[ 72, 49, 43],

...,

[192, 184, 163],

[190, 179, 159],

[181, 169, 147]],

[[ 51, 28, 22],

[ 60, 37, 31],

[ 81, 58, 52],

...,

[191, 183, 162],

[189, 176, 157],

[176, 164, 142]],

[[ 78, 58, 49],

[ 96, 76, 67],

[122, 102, 93],

...,

[191, 183, 162],

[188, 175, 156],

[172, 157, 136]],

...,

[[162, 140, 117],

[164, 142, 119],

[169, 147, 126],

...,

[ 5, 5, 5],

[ 6, 6, 6],

[ 6, 6, 6]],

[[168, 146, 123],

[170, 148, 125],

[176, 154, 131],

...,

[ 5, 5, 5],

[ 6, 6, 6],

[ 6, 6, 6]],

[[173, 151, 128],

[176, 154, 131],

[182, 160, 137],

...,

[ 4, 4, 4],

[ 6, 6, 6],

[ 6, 6, 6]]], dtype=uint8)>

# The min and the max values

tf.reduce_min(image), tf.reduce_max(image)

(<tf.Tensor: shape=(), dtype=uint8, numpy=0>, <tf.Tensor: shape=(), dtype=uint8, numpy=255>)

The minimum and maximum values are 0 and 255, as expected for an 8-bit RGB image.

Plot the image

import matplotlib.pyplot as plt

plt.imshow(image);

plt.title(CLASS_NAMES[label.numpy()], fontdict=dict(weight='bold', size=20));

plt.axis('off')

(-0.5, 511.5, 383.5, -0.5)

Create preprocessing functions for our data

In the previous notebooks, when our images were in the folder we used the method tf.keras.preprocessing.image_dataset_from_directory() to load them in. Doing so, we passed multiple preprocessing arguments which made our data ready to be inputted to the model.

However, since we've downloaded the data from Tensorflow Datasets, there are a few preprocessing steps we have to take before it's ready for the model.

Specifically:

- Our data is currently in uint8 dtype

  - Convert to float32 datatype

- Comprised of all different sized tensors (different sized images)

  - Convert to same sized tensors (batches require all tensors to have the same shape e.g. (224, 224, 3))

- Not scaled (the pixel values are between 0 & 255)

  - We need to scale them to values between (0 & 1) [Normalization]. Each pixel is a feature.

To take care of these, we will create a preprocess_image() function which:

- Resizes an input image tensor to a specified size using tf.image.resize()

- Converts an input image tensor's current datatype to tf.float32 using tf.cast()

Note: Pretrained EfficientNetB{X} models in tf.keras.applications have rescaling built-in. The same models downloaded from Tensorflow Hub behave differently, probably because they don't have rescaling by default.

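# Make a function to preprocess the image

def preprocess_image(image, label, img_size=224, dtype=tf.float32, scale=False):
    # Resize to (img_size, img_size); tf.image.resize returns a float32 tensor by default
    image = tf.image.resize(image, size=(img_size, img_size))
    # Optionally scale pixel values from [0, 255] to [0, 1] (assumes an 8-bit image)
    if scale:
        image = image/255.0
    # Convert to the requested dtype
    image = tf.cast(image, dtype)
    return image, label

- The above preprocess_image function takes in an image, resizes it, optionally scales it (assuming an 8-bit image), and converts it to the given dtype.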

# preprocess a single image

preprocessed_img = preprocess_image(image, label)[0]

print(f'''Image before preprocessing:\n{image}

Shape: {image.shape}

Datatype: {image.dtype}

''')

print('-'*10, '\n')

print(f'''Image after preprocessing:\n{preprocessed_img}

Shape: {preprocessed_img.shape}

Datatype: {preprocessed_img.dtype}

''')

Image before preprocessing: [[[ 54 30 26] [ 57 33 29] [ 72 49 43] ... [192 184 163] [190 179 159] [181 169 147]] [[ 51 28 22] [ 60 37 31] [ 81 58 52] ... [191 183 162] [189 176 157] [176 164 142]] [[ 78 58 49] [ 96 76 67] [122 102 93] ... [191 183 162] [188 175 156] [172 157 136]] ... [[162 140 117] [164 142 119] [169 147 126] ... [ 5 5 5] [ 6 6 6] [ 6 6 6]] [[168 146 123] [170 148 125] [176 154 131] ... [ 5 5 5] [ 6 6 6] [ 6 6 6]] [[173 151 128] [176 154 131] [182 160 137] ... [ 4 4 4] [ 6 6 6] [ 6 6 6]]] Shape: (384, 512, 3) Datatype: <dtype: 'uint8'> ---------- Image after preprocessing: [[[ 56.23469 32.591835 27.877552 ] [ 98.16328 75.16328 68.50001 ] [121.62245 101.62245 91.63265 ] ... [191.72456 191.36736 170.93883 ] [202.18863 196.9743 176.90286 ] [185.918 174.10167 153.6169 ]] [[ 93.47959 73.55102 64.19388 ] [144.57143 124.64286 113.561226 ] [169.66327 150.20409 138.79082 ] ... [196.22955 195.22955 176.80103 ] [202.02025 194.9335 175.87225 ] [182.24942 168.44324 148.72888 ]] [[166.51021 149.08163 133.51021 ] [169.28572 151.85715 136.28572 ] [172.10204 155.67348 140.10204 ] ... [193.92346 191.68872 174.21426 ] [200.37233 191.62741 174.35698 ] [180.43791 164.87663 145.44292 ]] ... [[162.99997 140.99997 119.57139 ] [174.41327 152.41327 131.41327 ] [197.2398 174.66838 156.45409 ] ... [ 5.831621 5.831621 5.831621 ] [ 5.0561323 5.0561323 5.0561323] [ 6. 6. 6. ]] [[163.09698 141.09698 118.096985 ] [177.14291 155.14291 134.14291 ] [197.45918 174.88776 156.67346 ] ... [ 5.214264 5.214264 5.214264 ] [ 4.137751 4.137751 4.137751 ] [ 6. 6. 6. ]] [[172.91324 150.91324 127.91324 ] [187.28569 165.28569 142.28569 ] [200.0102 178.22449 157.65305 ] ... [ 4.7091513 4.7091513 4.7091513] [ 4.025515 4.025515 4.025515 ] [ 6. 6. 6. ]]] Shape: (224, 224, 3) Datatype: <dtype: 'float32'>

What does the image look like after preprocessing?

- We can still plot our image, if we divide by 255. Floats between 0 and 1 are interpreted as RGB intensities

plt.imshow(preprocessed_img/255.);

plt.title(CLASS_NAMES[label.numpy()], fontdict=dict(weight='bold', size=20));

plt.axis('off')

(-0.5, 223.5, 223.5, -0.5)

len(train_data), len(test_data)

(75750, 25250)

Batch and prepare datasets

Before we can model our data, we have to turn it into batches. Computing on batches is memory efficient (only one batch has to fit in memory at a time), gives more gradient descent steps per epoch, and in turn leads to faster training.

Essentially, we have to convert our 75750 (train) and 25250 (test) images into batches of size 32 ("Friends don't let friends use minibatch sizes larger than 32").
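As a quick worked calculation, the number of batches (steps) per epoch follows directly from the dataset sizes quoted above. The helper name num_batches is only illustrative.

```python
import math

BATCH_SIZE = 32


def num_batches(num_samples, batch_size=BATCH_SIZE):
    """Number of batches needed to cover num_samples (the last batch may be smaller)."""
    return math.ceil(num_samples / batch_size)


train_batches = num_batches(75_750)
test_batches = num_batches(25_250)

# Size of the final, partially filled training batch
last_train_batch = 75_750 - (train_batches - 1) * BATCH_SIZE

print(train_batches, test_batches, last_train_batch)
```

So one training epoch is 2368 gradient descent steps, and the final batch holds only 6 images, which is why the batch dimension of the batched datasets is reported as None.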

To do this in the most efficient way, we will use the tf.data API. See Better performance with the tf.data API.

Specifically, we're going to be using:

- map() - maps a predefined function to a target dataset (e.g. we will map preprocess_image to our image tensors). Resizing is the most essential step here, as the images need to be of the same size to be batched later.

- shuffle() - randomly shuffles elements of the target dataset using a buffer of buffer_size elements. Ideally, buffer_size should be equal to the size of the dataset, but if the training set is large, the buffer might not fit in the memory. A fairly large number such as 1000 or 10000 is usually sufficient for shuffling.

- batch() - turns elements of the target dataset into batches (size defined by the batch_size parameter)

- prefetch() - prepares subsequent batches of data whilst other batches of data are being computed on (i.e. as the model is training, upcoming batches are being prepared simultaneously)

Things to note:

- For methods with a num_parallel_calls argument, set it to tf.data.AUTOTUNE. This will parallelize preprocessing and significantly improve speed.

- Can't use cache() unless the dataset fits in the memory

We are going to do the things in the following order:

Original dataset -> map() -> shuffle() -> batch() -> prefetch() -> PrefetchedDataset

In words this will sound like:

We first map the preprocessing function across our training dataset, then shuffle a number of elements before batching them, and finally make sure new batches are prepared whilst the model is training on the current batch.
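To make the order of operations concrete, here is a plain-Python imitation of map -> shuffle -> batch using generators. It is a sketch of the idea only, not how tf.data is implemented: shuffle_buffer and batch are hypothetical helpers, and prefetch() is left out because it only overlaps data preparation with training and does not change which elements come out.

```python
import random


def shuffle_buffer(items, buffer_size, seed=0):
    """Yield items in an order randomised within a buffer of buffer_size elements."""
    rng = random.Random(seed)
    buffer = []
    for item in items:
        if len(buffer) < buffer_size:
            buffer.append(item)        # fill the buffer first
            continue
        idx = rng.randrange(buffer_size)
        yield buffer[idx]              # emit a random buffered element...
        buffer[idx] = item             # ...and replace it with the incoming one
    rng.shuffle(buffer)                # input exhausted: drain what is left
    yield from buffer


def batch(items, batch_size):
    """Group consecutive items into lists of batch_size (the last one may be shorter)."""
    current = []
    for item in items:
        current.append(item)
        if len(current) == batch_size:
            yield current
            current = []
    if current:
        yield current


samples = range(10)
preprocessed = map(lambda x: x * 10, samples)            # stands in for map(preprocess_image)
shuffled = shuffle_buffer(preprocessed, buffer_size=3)   # stands in for shuffle(buffer_size)
batches = list(batch(shuffled, batch_size=4))            # stands in for batch(32)

print(batches)
```

With a small buffer, an element can only move a limited distance from its original position, which is why a buffer_size close to the dataset size gives the most thorough shuffle.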

Source: Page 422 Hands-On Machine Learning with Scikit-Learn, Keras & TensorFlow Book by Aurélien Géron.

Now let us carry out the above steps on both train and test data

# Map the preprocessing function to training data (and parallelize)

train_data = train_data.map(map_func=preprocess_image, num_parallel_calls=tf.data.AUTOTUNE)

# Shuffle train_data and turn it into batches and prefetch it (prepare beforehand/keep preparing -> load in memory for faster fetching)

train_data = train_data.shuffle(buffer_size=1000).batch(32).prefetch(buffer_size=tf.data.AUTOTUNE)

test_data = test_data.map(map_func=preprocess_image, num_parallel_calls=tf.data.AUTOTUNE)

test_data = test_data.shuffle(buffer_size=1000).batch(32).prefetch(buffer_size=tf.data.AUTOTUNE)

train_data, test_data

(<PrefetchDataset shapes: ((None, 224, 224, 3), (None,)), types: (tf.float32, tf.int64)>, <PrefetchDataset shapes: ((None, 224, 224, 3), (None,)), types: (tf.float32, tf.int64)>)

- Now our data is in tuples of (image, label) with datatypes of (tf.float32, tf.int64). This is why we added the otherwise redundant argument label to our preprocess_image function: map() passes both elements of each tuple to it.

- We could have done this without calling prefetch(), however we would then see significantly slower data loading speeds when training a model. So most data pipelines should end with a call to prefetch()

Create modelling callbacks

Since we're going to be training on a large amount of data and training could take a long amount of time, it's a good idea to setup some modelling callbacks to be sure of mainly two things:

- That we are tracking our model's training logs -> tf.keras.callbacks.TensorBoard()

- That our model is being checkpointed (weights saved after various training milestones) -> tf.keras.callbacks.ModelCheckpoint()

  - Checkpointing is also helpful so we can start fine-tuning our model at a particular epoch and revert back to a previous state if fine-tuning offers no benefits

from src.evaluate import KerasMetrics

TASK = '101_food_multiclass_classification'

checkpoint_path = f'../checkpoints/{TASK}/efficientnetb0_feature_extract_all_data/efficientnetb0_feature_extract_all_data.ckpt'

model_checkpoint = tf.keras.callbacks.ModelCheckpoint(checkpoint_path,

monitor='val_accuracy', # save the model weights with best validation accuracy

save_best_only=True, # only save the best weights

save_weights_only=True, # only save model weights (not whole model)

verbose=0)
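The list above also names a TensorBoard callback, which the cell shown does not create. One common pattern is to give every run its own timestamped log directory. The sketch below only builds that path: the helper name make_tensorboard_log_dir and the ../tensorboard_logs root are assumptions, not names from this project.

```python
import datetime
import os


def make_tensorboard_log_dir(root, task, experiment, now=None):
    """Build a per-run log directory path: root/task/experiment/YYYYmmdd-HHMMSS."""
    now = now or datetime.datetime.now()
    return os.path.join(root, task, experiment, now.strftime("%Y%m%d-%H%M%S"))


log_dir = make_tensorboard_log_dir(
    root="../tensorboard_logs",  # hypothetical location, mirroring ../checkpoints
    task="101_food_multiclass_classification",
    experiment="efficientnetb0_feature_extract_all_data",
)
print(log_dir)

# With Tensorflow imported as tf, the callback could then be created with:
# tensorboard_callback = tf.keras.callbacks.TensorBoard(log_dir=log_dir)
```

Keeping one directory per run lets TensorBoard display the feature extraction and fine-tuning runs side by side.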

Setup mixed precision training

- Normally, tensors in Tensorflow default to float32 datatype (unless otherwise specified).

- float32 is also known as single-precision-floating-point-format. The 32 means it usually occupies 32 bits in computer memory.

- The GPU has limited memory, therefore it can only handle a number of float32 tensors at the same time.

- This is where mixed precision training comes in. Mixed precision training involves a mix of float16 and float32 tensors to make better use of the GPU's memory.

- float16 means half-precision-floating-point-format. Each float16 value occupies 16 bits in memory, half as much as a float32 value.

So if we use mixed precision training, the model will make use of float32 and float16 data types to use less memory wherever possible, and hence run faster (using less memory per tensor means more tensors can be computed on simultaneously)

This can make training on modern GPUs (with a compute capability score of 7.0+) up to 3x faster. Read the Tensorflow mixed precision guide.
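The trade-off between the two formats can be seen without a GPU. The sketch below uses Python's struct module (format "e" is half precision, "f" is single precision) to compare storage size, rounding error and range. The round_trip helper is illustrative only.

```python
import struct


def round_trip(value, fmt):
    """Store value in the given struct format and read it back as a Python float."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


# Bytes needed per value: float16 vs float32
half_bytes, single_bytes = struct.calcsize("e"), struct.calcsize("f")
print(half_bytes, single_bytes)

# Rounding error when storing 0.1
half_error = abs(round_trip(0.1, "e") - 0.1)
single_error = abs(round_trip(0.1, "f") - 0.1)
print(half_error, single_error)

# float16 has a much smaller range: 70000.0 fits in float32 but not in float16
try:
    struct.pack("e", 70000.0)
    half_overflowed = False
except OverflowError:
    half_overflowed = True
print(half_overflowed)
```

Half the memory per value comes at the cost of coarser rounding and a smaller range, which is why mixed precision keeps numerically sensitive parts such as variables and the final softmax output in float32.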

# Turn on mixed precision training

from tensorflow.keras import mixed_precision

mixed_precision.set_global_policy(policy='mixed_float16')

mixed_precision.global_policy()

<Policy "mixed_float16">

Since the global policy is set to mixed_float16, our model will automatically take advantage of float16 computations where possible and in turn speed up training.

Build feature extraction model

- Callbacks: TensorBoard and ModelCheckpoint

- MixedPrecision training

- Feature extraction from EfficientNetB0

To build the feature extraction model we will:

- Use EfficientNetB0 from tf.keras.applications. This is pretrained on ImageNet.

  - Since our output shape (i.e. the number of prediction classes) is different, we will use include_top=False

- We will not train/tune the weights of this model and hence will set trainable=False

- The downstream model will be created using the Keras Functional API.

- Optimizer = Adam, and sparse categorical crossentropy as the loss function (since our labels are integers, not one-hot encoded)

Note: Since we're using mixed precision training, our model needs a separate Activation layer with hardcoded dtype=float32. This ensures the outputs of our model are returned as float32, which is more numerically stable than the float16 datatype (important for loss calculations).

N_CLASSES = len(CLASS_NAMES)

N_CLASSES

101

from tensorflow.keras import layers

from tensorflow.keras.layers.experimental import preprocessing as KerasPreprocessing

model_name = 'efficientnetb0_feature_extract'

# Create the base pretrained model

pretrained_model = tf.keras.applications.EfficientNetB0(include_top=False)

pretrained_model.trainable = False

# Create the downstream model

input_shape = (224, 224, 3)

inputs = layers.Input(shape=input_shape, name='input_layer')

features = pretrained_model(inputs)

pooled = layers.GlobalAveragePooling2D(name='pooling_layer')(features)

outputs = layers.Dense(N_CLASSES)(pooled)

outputs = layers.Activation('softmax', dtype=tf.float32, name='softmax_float32')(outputs)

# Final model

model = tf.keras.models.Model(inputs, outputs, name=model_name)

# Compile the model

model.compile(loss='sparse_categorical_crossentropy',

optimizer=tf.keras.optimizers.Adam(), metrics=['accuracy'])

# Summary

model.summary()

Model: "efficientnetb0_feature_extract"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
input_layer (InputLayer)     [(None, 224, 224, 3)]     0
_________________________________________________________________
efficientnetb0 (Functional)  (None, None, None, 1280)  4049571
_________________________________________________________________
pooling_layer (GlobalAverage (None, 1280)              0
_________________________________________________________________
dense_5 (Dense)              (None, 101)               129381
_________________________________________________________________
softmax_float32 (Activation) (None, 101)               0
=================================================================
Total params: 4,178,952
Trainable params: 129,381
Non-trainable params: 4,049,571
_________________________________________________________________
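The parameter counts in the summary can be checked by hand: a Dense layer has one weight per (input feature, unit) pair plus one bias per unit, and only this layer is trainable because the base model is frozen. The helper name dense_params is only illustrative.

```python
def dense_params(in_features, units):
    """Weights (in_features * units) plus biases (units) of a fully connected layer."""
    return in_features * units + units


base_model_params = 4_049_571             # frozen EfficientNetB0, from the summary above
head_params = dense_params(1280, 101)     # pooled feature vector -> 101 food classes

print(head_params)                        # trainable params
print(base_model_params + head_params)    # total params
```

The pooling and activation layers contribute no parameters, so the trainable count is exactly the Dense layer's 129,381.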

Checking layer dtype policies¶

for layer in model.layers:

    print(layer.name, layer.trainable, layer.dtype, layer.dtype_policy)

input_layer True float32 <Policy "float32">
efficientnetb0 False float32 <Policy "mixed_float16">
pooling_layer True float32 <Policy "mixed_float16">
dense_5 True float32 <Policy "mixed_float16">
softmax_float32 True float32 <Policy "float32">

Note: A layer can have a dtype of float32 and a dtype policy of mixed_float16 because it stores its variables (weights and biases) in float32 (more numerically stable) but computes in float16 (faster).
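One way to see why the variables stay in float32: a small weight update can be rounded away entirely in float16. The sketch below is an illustration using only the standard library, not how Keras implements mixed precision. The helper round_to and the update size 1e-4 are assumptions chosen for the example.

```python
import struct

def round_to(fmt, x):
    """Round a Python float to the precision of a struct format
    ('e' = float16, 'f' = float32)."""
    return struct.unpack(fmt, struct.pack(fmt, x))[0]

weight = 1.0
update = 1e-4  # hypothetical small gradient step

new_fp16 = round_to('e', weight + update)  # stored back as float16
new_fp32 = round_to('f', weight + update)  # stored back as float32

print(new_fp16 == weight)  # True means the update was lost
print(new_fp32 == weight)  # False means the update was kept
```

Near 1.0, adjacent float16 values are about 0.001 apart, so a step of 1e-4 falls below the resolution of the format.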

Checking the dtype policies of the first 20 layers of the pretrained_model:

for layer in model.layers[1].layers[:20]:

    print(layer.name, layer.trainable, layer.dtype, layer.dtype_policy)

input_7 False float32 <Policy "float32">
rescaling_6 False float32 <Policy "mixed_float16">
normalization_6 False float32 <Policy "float32">
stem_conv_pad False float32 <Policy "mixed_float16">
stem_conv False float32 <Policy "mixed_float16">
stem_bn False float32 <Policy "mixed_float16">
stem_activation False float32 <Policy "mixed_float16">
block1a_dwconv False float32 <Policy "mixed_float16">
block1a_bn False float32 <Policy "mixed_float16">
block1a_activation False float32 <Policy "mixed_float16">
block1a_se_squeeze False float32 <Policy "mixed_float16">
block1a_se_reshape False float32 <Policy "mixed_float16">
block1a_se_reduce False float32 <Policy "mixed_float16">
block1a_se_expand False float32 <Policy "mixed_float16">
block1a_se_excite False float32 <Policy "mixed_float16">
block1a_project_conv False float32 <Policy "mixed_float16">
block1a_project_bn False float32 <Policy "mixed_float16">
block2a_expand_conv False float32 <Policy "mixed_float16">
block2a_expand_bn False float32 <Policy "mixed_float16">
block2a_expand_activation False float32 <Policy "mixed_float16">

The mixed precision API automatically applies the mixed_float16 dtype policy to the layers that can benefit from it, and keeps layers that shouldn't use it in float32 (e.g. the normalization layer at the start of the base model).

Fit the feature extraction model¶

from src.utils import create_tensorboard_callback

from src.visualize import plot_learning_curve

from src.utils import reshape_classification_prediction

history = model.fit(train_data,

epochs=3,

steps_per_epoch=len(train_data),

validation_data=test_data, # Used here only to monitor training; normally the validation set should be separate from the test set.

validation_steps=len(test_data),

callbacks=[create_tensorboard_callback(experiment='efficientnetb0_feature_extract_all_data', task='101_food_multiclass_classification',

parent_dir='../tensorboard_logs/'), model_checkpoint])

Saving TensorBoard log files to " ../tensorboard_logs/101_food_multiclass_classification/efficientnetb0_feature_extract_all_data/20210618-182607"
Epoch 1/3
2368/2368 [==============================] - 190s 80ms/step - loss: 0.9825 - accuracy: 0.7374 - val_loss: 1.0515 - val_accuracy: 0.7172
Epoch 2/3
2368/2368 [==============================] - 188s 79ms/step - loss: 0.9491 - accuracy: 0.7466 - val_loss: 1.0560 - val_accuracy: 0.7146
Epoch 3/3
2368/2368 [==============================] - 192s 80ms/step - loss: 0.9146 - accuracy: 0.7537 - val_loss: 1.0634 - val_accuracy: 0.7133
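The step counts in the log follow from the dataset sizes. The sketch below assumes a batch size of 32; the batch size is set outside this section, so treat it as an assumption, though it matches the 2368 training steps shown above.

```python
import math

n_train = 75_750   # Food101 training images
n_test = 25_250    # Food101 test images
batch_size = 32    # assumption: not defined in this section

# The last, partially filled batch still counts as a step, hence the ceiling
train_steps = math.ceil(n_train / batch_size)
test_steps = math.ceil(n_test / batch_size)

print(train_steps, test_steps)
```

If the batch size were different, len(train_data) and len(test_data) would change accordingly.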

plot_learning_curve(history, extra_metric='accuracy');

Training accuracy keeps rising (0.7374 to 0.7537) while validation accuracy drifts down slightly (0.7172 to 0.7133) and validation loss increases. The model is starting to overfit: the extra epochs improve the fit to the training set without improving performance on unseen data.
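The gap between training and validation accuracy can be read off the training log. This short sketch (an addition, using the values printed by model.fit above) shows it widening every epoch while validation loss rises.

```python
# Per-epoch metrics copied from the training log
train_acc = [0.7374, 0.7466, 0.7537]
val_acc = [0.7172, 0.7146, 0.7133]
val_loss = [1.0515, 1.0560, 1.0634]

# Generalization gap: how much better the model does on data it has seen
gaps = [round(t - v, 4) for t, v in zip(train_acc, val_acc)]

gap_widening = all(later > earlier for earlier, later in zip(gaps, gaps[1:]))
val_loss_rising = all(later > earlier for earlier, later in zip(val_loss, val_loss[1:]))

print(gaps, gap_widening, val_loss_rising)
```

A widening gap together with rising validation loss is the usual sign of overfitting, although over three epochs the effect is still small.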

PREDICTIONS = {}

PREDICTIONS[model_name] = {}

for data, subset in zip([train_data, test_data], ['train', 'test']):

    PREDICTIONS[model_name][subset] = reshape_classification_prediction(model.predict(data, verbose=1))

2368/2368 [==============================] - 144s 60ms/step
790/790 [==============================] - 47s 58ms/step

Load and evaluate the checkpoint weights¶

We can load the model's checkpointed weights by:

- Cloning our model (or creating an identical new one)

- Calling the load_weights method on the cloned model and passing it the path to the checkpointed weights

cloned_model = tf.keras.models.clone_model(model)

cloned_model.load_weights(checkpoint_path)

<tensorflow.python.training.tracking.util.CheckpointLoadStatus at 0x7f5de3b31fd0>

# Need to recompile the model

cloned_model.compile(loss='sparse_categorical_crossentropy',

optimizer=tf.keras.optimizers.Adam(), metrics=['accuracy'])

from src.utils import check_tfmodel_weights_equality

check_tfmodel_weights_equality(model, cloned_model) # Umm whaaaaaaat?

False
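One possible explanation for the False result is that the checkpoint does not hold the final-epoch weights. This is an assumption: model_checkpoint is defined outside this section, so its settings are not known here. If it was created with save_best_only=True and monitors val_accuracy, it keeps the weights from the best validation epoch rather than the last one. The sketch below replays the logged validation accuracies through that rule; epoch_kept_by_best_only is a hypothetical helper, not a Keras function.

```python
# Validation accuracy per epoch, copied from the training log
val_acc_per_epoch = [0.7172, 0.7146, 0.7133]

def epoch_kept_by_best_only(metric_values):
    """Return the 1-based epoch whose weights would be kept by a checkpoint
    that only overwrites its file when the monitored metric improves."""
    best_epoch, best_value = None, float('-inf')
    for epoch, value in enumerate(metric_values, start=1):
        if value > best_value:
            best_epoch, best_value = epoch, value
    return best_epoch

kept_epoch = epoch_kept_by_best_only(val_acc_per_epoch)
final_epoch = len(val_acc_per_epoch)

print(kept_epoch, final_epoch, kept_epoch == final_epoch)
```

Under that assumption the checkpoint would contain the epoch 1 weights while model holds the epoch 3 weights, so the two sets of weights would differ. If the checkpoint was configured differently, the mismatch has another cause and the helper check_tfmodel_weights_equality is worth inspecting.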

Save the whole model to file¶

save_dir = r'../models/101_food_multiclass_classification/efficientnetb0_feature_extraction_all_data'

model.save(save_dir)

loaded_saved_model = tf.keras.models.load_model(save_dir)