In [ ]:

%load_ext autoreload

%autoreload 2

The autoreload extension is already loaded. To reload it, use: %reload_ext autoreload

Fine Tuning¶

Transfer Learning Part 2¶

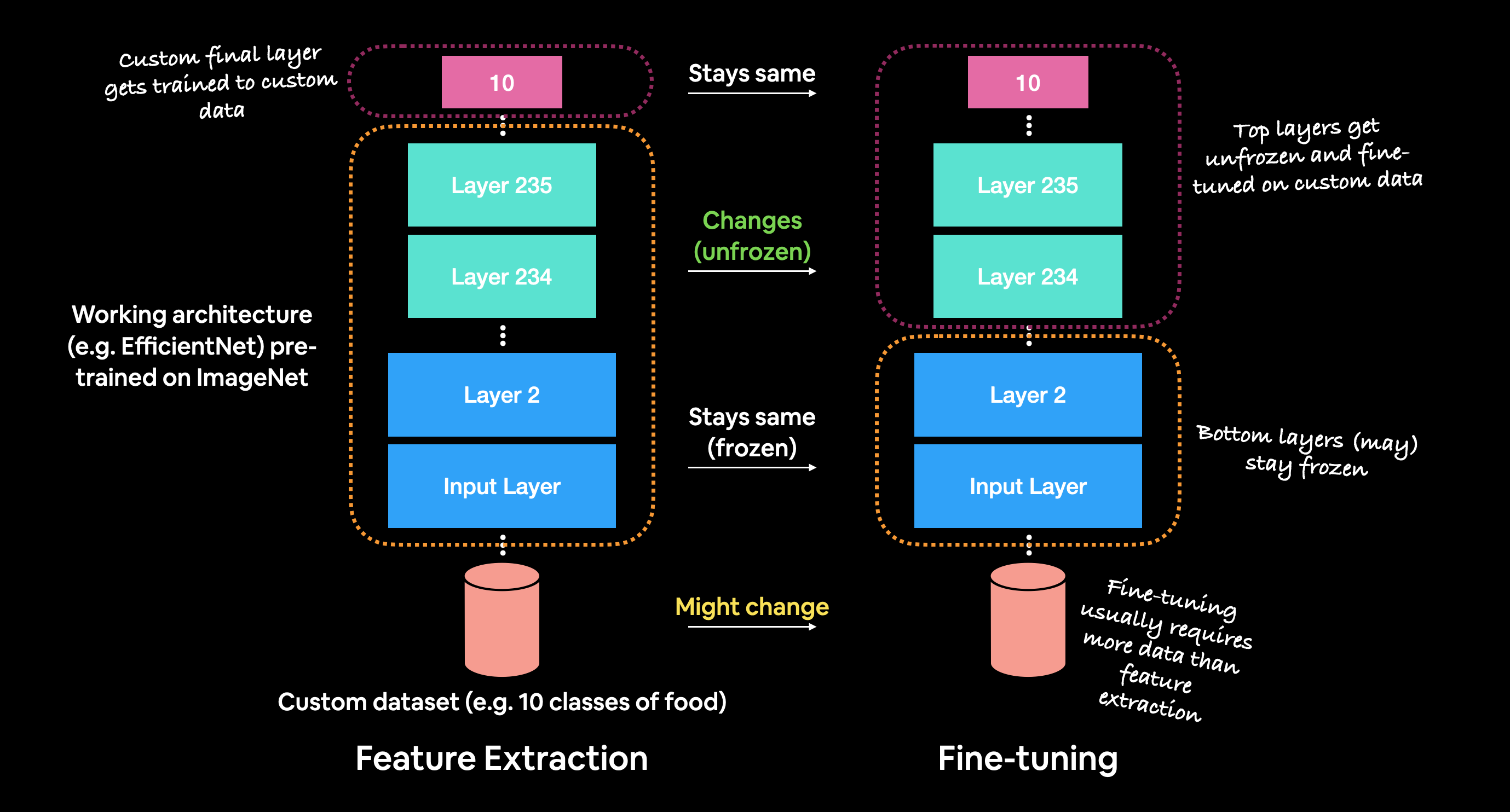

The previous notebook used pretrained models as feature extractors: we took the knowledge these models had learned on a similar task and used it directly, without changing their weights, in our downstream model. That was Transfer Learning - Feature Extraction.

- Used in low-data-volume scenarios, or when the pretrained model's task is very similar to ours. E.g. pretrained model task = "Classifying 1000 types of foods from images", downstream task = "Classifying 10 fast food dishes".

In this notebook, we will take the pretrained models and fine-tune their weights on our task. This is Transfer Learning - Fine Tuning.

- Used in medium- to high-data-volume scenarios

- The initial layers of the pretrained model have learned lower-level features, which remain useful as they are

- So instead of retraining everything, we adjust only the top few layers by gradually unfreezing them
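As a rough illustration of "unfreezing the top few layers", here is a minimal sketch using a tiny stand-in network instead of a real pretrained model, so nothing needs to be downloaded. The names `toy_backbone` and `unfreeze_top_layers`, the choice of two layers, and the learning rate of `1e-4` are assumptions made for this example, not values used elsewhere in this notebook.

```python
import tensorflow as tf
from tensorflow.keras import layers

# Hypothetical stand-in for a pretrained backbone: five small Dense layers
backbone = tf.keras.Sequential(
    [tf.keras.Input(shape=(8,))]
    + [layers.Dense(8, activation='relu', name=f'block_{i}') for i in range(5)],
    name='toy_backbone',
)


def unfreeze_top_layers(model, n_layers):
    """Make only the last `n_layers` layers of `model` trainable."""
    model.trainable = True
    cutoff = len(model.layers) - n_layers
    for layer in model.layers[:cutoff]:
        layer.trainable = False
    return [layer.name for layer in model.layers if layer.trainable]


trainable_names = unfreeze_top_layers(backbone, n_layers=2)
print(trainable_names)

# Changes to `trainable` take effect at compile time, so compile (again) afterwards.
# A small learning rate (assumed value) keeps the pretrained weights from changing too fast.
model = tf.keras.Sequential([backbone, layers.Dense(10, activation='softmax', name='output_layer')])
model.compile(
    loss='categorical_crossentropy',
    optimizer=tf.keras.optimizers.Adam(learning_rate=1e-4),
    metrics=['accuracy'],
)
```

Unfreezing gradually would mean repeating this with a larger `n_layers` after each round of training, recompiling each time.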

Some imports¶

In [ ]:

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from src.image import ClassicImageDataDirectory, ImageDataset

Setup all data¶

In [ ]:

DATA_DIR = {

'1_percent': '../data/10_food_classes_1_percent',

'10_percent': '../data/10_food_classes_10_percent',

'100_percent': '../data/10_food_classes_all_data',

}

IMAGE_DIM = (224, 224)

IMAGE_DATA_DIRECTORY = {data_pct: ClassicImageDataDirectory(data_dir, IMAGE_DIM, np.uint8) for data_pct, data_dir in DATA_DIR.items()}

10 Food Classes - Working with less data (10 percent)¶

Load the data¶

In [ ]:

data_pct = '10_percent'

data_dir = DATA_DIR[data_pct]

imgdir = IMAGE_DATA_DIRECTORY[data_pct]

imgdir

Out[ ]:

<src.image.datadirectory.ClassicImageDataDirectory at 0x15461736048>

Explore the data¶

In [ ]:

imgdir.class_names

Out[ ]:

('chicken_curry',

'chicken_wings',

'fried_rice',

'grilled_salmon',

'hamburger',

'ice_cream',

'pizza',

'ramen',

'steak',

'sushi')

In [ ]:

imgdir.labelcountdf

Out[ ]:

| | label | name | count_train | count_test |
|---|---|---|---|---|
| 0 | 0 | chicken_curry | 75 | 250 |
| 1 | 1 | chicken_wings | 75 | 250 |
| 2 | 2 | fried_rice | 75 | 250 |
| 3 | 3 | grilled_salmon | 75 | 250 |
| 4 | 4 | hamburger | 75 | 250 |
| 5 | 5 | ice_cream | 75 | 250 |
| 6 | 6 | pizza | 75 | 250 |
| 7 | 7 | ramen | 75 | 250 |
| 8 | 8 | steak | 75 | 250 |
| 9 | 9 | sushi | 75 | 250 |

In [ ]:

imgdir.plot_labelcounts();

View Example Images¶

In [ ]:

batch = imgdir.load(32)

imgen = next(batch)

imgen.view_random_images(class_names='all');

Imports¶

In [ ]:

import tensorflow as tf

from tensorflow.keras import layers, losses, optimizers

from tensorflow.keras.preprocessing.image import ImageDataGenerator

from src.evaluate import KerasMetrics

from src.visualize import plot_confusion_matrix, plot_keras_model, plot_learning_curve

from sklearn import metrics as skmetrics

EfficientNetB0 - Creating a model using the Keras Functional API¶

In [ ]:

from tensorflow.keras.applications import EfficientNetB0, ResNet50V2

Set up initial params¶

In [ ]:

DATA_PCT = '10_percent'

CLASSIFICATION_TYPE = 'categorical'

imgdir = IMAGE_DATA_DIRECTORY[DATA_PCT]

IMAGE_DIM = (224, 224)

NUM_CHANNELS = 3

INPUT_SHAPE = (*IMAGE_DIM, NUM_CHANNELS)

N_CLASSES = imgdir.n_classes

BATCH_SIZE = 32

VALIDATION_SPLIT = 0.2

SEED = 42

PRETRAINED_MODEL = 'EfficientNetB0'

Preprocess the data¶

In [ ]:

train_datagen = ImageDataGenerator(validation_split=VALIDATION_SPLIT)

test_datagen = ImageDataGenerator()

Setup the train and test directories¶

In [ ]:

train_dir = imgdir.train['dir']

test_dir = imgdir.test['dir']

Import the data from directories¶

In [ ]:

train_data = train_datagen.flow_from_directory(directory=train_dir, target_size=IMAGE_DIM, class_mode=CLASSIFICATION_TYPE,

batch_size=BATCH_SIZE, subset='training', seed=SEED)

validation_data = train_datagen.flow_from_directory(directory=train_dir, target_size=IMAGE_DIM, class_mode=CLASSIFICATION_TYPE,

batch_size=BATCH_SIZE, subset='validation', seed=SEED)

test_data = test_datagen.flow_from_directory(directory=test_dir, target_size=IMAGE_DIM, class_mode=CLASSIFICATION_TYPE,

batch_size=BATCH_SIZE, shuffle=False)

Found 600 images belonging to 10 classes.

Found 150 images belonging to 10 classes.

Found 2500 images belonging to 10 classes.
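The counts reported above follow from the label-count table and the parameters set earlier. The short sketch below reproduces that arithmetic, assuming the validation split is taken per class; the variable names are chosen for this example only.

```python
import math

images_per_class = 75      # count_train per class in the 10 percent dataset
test_per_class = 250       # count_test per class
n_classes = 10
validation_split = 0.2
batch_size = 32

# Assumption: the validation fraction is carved out of each class separately
val_per_class = int(images_per_class * validation_split)
n_validation = val_per_class * n_classes
n_training = images_per_class * n_classes - n_validation
n_test = test_per_class * n_classes
print(n_training, n_validation, n_test)  # 600 150 2500

# Number of batches per pass over each split (the last batch may be smaller)
train_steps = math.ceil(n_training / batch_size)
validation_steps = math.ceil(n_validation / batch_size)
test_steps = math.ceil(n_test / batch_size)
print(train_steps, validation_steps, test_steps)  # 19 5 79
```

The 19 training steps match the `19/19` progress counter that Keras prints for each epoch during fitting.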

Create the model (using Keras Functional API)¶

In [ ]:

# Create the pretrained model (This will download the model)

pretrained_model = EfficientNetB0(include_top=False)

# Make it non trainable

pretrained_model.trainable = False

# input layer

inputs = layers.Input(shape=INPUT_SHAPE, name='input_layer')

print(f'Input shape: {inputs.shape}')

# Scale the input only for models that need it (e.g. ResNet50V2). The EfficientNetB0 model in keras.applications has its own rescaling layer, so it doesn't require scaling

# inputs = tf.keras.layers.experimental.preprocessing.Rescaling(1/255.)(inputs)

# Extract features from pretrained model

features = pretrained_model(inputs)

print(f'Shape of features extracted from pretrained model: {features.shape}')

# Add a GlobalAveragePooling2D layer

pooled = layers.GlobalAvgPool2D(name='global_avg_pooling_layer')(features)

print(f'Shape after global pooling features: {pooled.shape}')

# Now add the final output layer

outputs = layers.Dense(N_CLASSES, activation='softmax', name='output_layer')(pooled)

# Now combine the inputs and outputs into a model

model = tf.keras.Model(inputs=inputs, outputs=outputs)

# Compile the model

model.compile(loss=losses.categorical_crossentropy, optimizer=optimizers.Adam(), metrics=[KerasMetrics.f1, 'accuracy'])

# Summary

model.summary()

Input shape: (None, 224, 224, 3)

Shape of features extracted from pretrained model: (None, 7, 7, 1280)

Shape after global pooling features: (None, 1280)

Model: "model_1"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_layer (InputLayer) [(None, 224, 224, 3)] 0

_________________________________________________________________

efficientnetb0 (Functional) (None, None, None, 1280) 4049571

_________________________________________________________________

global_avg_pooling_layer (Gl (None, 1280) 0

_________________________________________________________________

output_layer (Dense) (None, 10) 12810

=================================================================

Total params: 4,062,381

Trainable params: 12,810

Non-trainable params: 4,049,571

_________________________________________________________________

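The output shape of EfficientNetB0 is reported as (None, None, None, 1280): the spatial dimensions are left unspecified, so the size of the feature map depends on the size of the input image (here a 224 x 224 input produces 7 x 7 x 1280 features). The model can therefore accept other image sizes, although there may be a recommended input shape described by the authors of this model.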
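To see why the pooled shape stays (None, 1280) whatever the spatial size of the feature map, here is a small NumPy sketch of what global average pooling computes. The random arrays are stand-ins for real backbone outputs, and the 8 x 8 case is a hypothetical larger input added for comparison.

```python
import numpy as np

rng = np.random.default_rng(42)

# Stand-in for the backbone output on a batch of 2 images: (batch, height, width, channels)
features = rng.normal(size=(2, 7, 7, 1280))

# Global average pooling: average over the two spatial axes, keep batch and channels
pooled = features.mean(axis=(1, 2))
print(pooled.shape)  # (2, 1280)

# A larger (hypothetical) feature map pools down to the same vector length
pooled_large = rng.normal(size=(2, 8, 8, 1280)).mean(axis=(1, 2))
print(pooled_large.shape)  # (2, 1280)

# Dense(10) on 1280 features: one weight per (feature, class) pair plus one bias per class
n_features, n_classes = 1280, 10
dense_params = n_features * n_classes + n_classes
print(dense_params)  # 12810
```

The last number matches the 12,810 trainable parameters in the summary: with the backbone frozen, only the output layer is trained.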

Fit the model¶

In [ ]:

history = model.fit(train_data, steps_per_epoch=len(train_data), validation_data=validation_data, validation_steps=len(validation_data), epochs=10)

Epoch 1/10

19/19 [==============================] - 14s 512ms/step - loss: 2.2085 - f1: 0.0000e+00 - accuracy: 0.2123 - val_loss: 1.6883 - val_f1: 0.0121 - val_accuracy: 0.5867

Epoch 2/10

19/19 [==============================] - 6s 337ms/step - loss: 1.3832 - f1: 0.1118 - accuracy: 0.7037 - val_loss: 1.2918 - val_f1: 0.3371 - val_accuracy: 0.6933

Epoch 3/10

19/19 [==============================] - 8s 437ms/step - loss: 0.9837 - f1: 0.5548 - accuracy: 0.7723 - val_loss: 1.1034 - val_f1: 0.5064 - val_accuracy: 0.7333

Epoch 4/10

19/19 [==============================] - 7s 352ms/step - loss: 0.7523 - f1: 0.7338 - accuracy: 0.8296 - val_loss: 1.0094 - val_f1: 0.6135 - val_accuracy: 0.7533

Epoch 5/10

19/19 [==============================] - 8s 425ms/step - loss: 0.6182 - f1: 0.7978 - accuracy: 0.8699 - val_loss: 0.9417 - val_f1: 0.6539 - val_accuracy: 0.7533

Epoch 6/10

19/19 [==============================] - 7s 358ms/step - loss: 0.5287 - f1: 0.8374 - accuracy: 0.8984 - val_loss: 0.9044 - val_f1: 0.6878 - val_accuracy: 0.7467

Epoch 7/10

19/19 [==============================] - 7s 354ms/step - loss: 0.4788 - f1: 0.8490 - accuracy: 0.9104 - val_loss: 0.8780 - val_f1: 0.6889 - val_accuracy: 0.7467

Epoch 8/10

19/19 [==============================] - 7s 366ms/step - loss: 0.4796 - f1: 0.8540 - accuracy: 0.9002 - val_loss: 0.8525 - val_f1: 0.7075 - val_accuracy: 0.7600

Epoch 9/10

19/19 [==============================] - 7s 358ms/step - loss: 0.3983 - f1: 0.8986 - accuracy: 0.9309 - val_loss: 0.8310 - val_f1: 0.7295 - val_accuracy: 0.7733

Epoch 10/10

19/19 [==============================] - 7s 350ms/step - loss: 0.3710 - f1: 0.8997 - accuracy: 0.9159 - val_loss: 0.8190 - val_f1: 0.7399 - val_accuracy: 0.7667

Learning Curve¶

In [ ]:

plot_learning_curve(model, extra_metric='f1');

The model is probably a bit overfitted (training accuracy ends around 0.92 while validation accuracy ends around 0.77), but we got so much better performance with just 10% of the data!
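One simple way to put a number on the overfitting is to track the gap between training and validation accuracy per epoch. The sketch below does this with the accuracy values copied from the training log above; in the notebook the same lists would normally come from `history.history`, and the variable names here are chosen for illustration.

```python
# Accuracy values per epoch, copied from the training log above
accuracy = [0.2123, 0.7037, 0.7723, 0.8296, 0.8699, 0.8984, 0.9104, 0.9002, 0.9309, 0.9159]
val_accuracy = [0.5867, 0.6933, 0.7333, 0.7533, 0.7533, 0.7467, 0.7467, 0.7600, 0.7733, 0.7667]

# Positive gap = the model does better on training data than on validation data
gaps = [round(train - val, 4) for train, val in zip(accuracy, val_accuracy)]

# Epoch (1-based) with the highest validation accuracy
best_epoch = max(range(len(val_accuracy)), key=val_accuracy.__getitem__) + 1

print(best_epoch)  # 9
print(gaps[0], gaps[-1])  # -0.3744 0.1492
```

The gap starts negative and grows to roughly 0.15 by the last epoch, which is the widening train/validation spread seen in the learning curve.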

Prediction Evaluation¶

In [ ]:

y_test_pred_probs = model.predict(test_data)

y_test_preds = y_test_pred_probs.argmax(axis=1)

Classification Report¶

In [ ]:

print(skmetrics.classification_report(test_data.labels, y_test_preds, target_names=test_data.class_indices))

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| chicken_curry | 0.81 | 0.70 | 0.75 | 250 |
| chicken_wings | 0.89 | 0.81 | 0.85 | 250 |
| fried_rice | 0.83 | 0.92 | 0.87 | 250 |
| grilled_salmon | 0.71 | 0.74 | 0.73 | 250 |
| hamburger | 0.87 | 0.92 | 0.89 | 250 |
| ice_cream | 0.94 | 0.82 | 0.88 | 250 |
| pizza | 0.95 | 0.96 | 0.96 | 250 |
| ramen | 0.88 | 0.89 | 0.88 | 250 |
| steak | 0.68 | 0.80 | 0.74 | 250 |
| sushi | 0.87 | 0.84 | 0.85 | 250 |
| accuracy | | | 0.84 | 2500 |
| macro avg | 0.84 | 0.84 | 0.84 | 2500 |
| weighted avg | 0.84 | 0.84 | 0.84 | 2500 |
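As a quick sanity check on how the report's columns relate, the sketch below recomputes an F1 score from precision and recall and the macro average from the per-class F1 values. The helper `f1_score` is written for this example (it is not the scikit-learn function), and because the inputs are the rounded values from the report, the last digit could differ for some classes.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# chicken_curry row: precision 0.81, recall 0.70
chicken_curry_f1 = f1_score(0.81, 0.70)
print(round(chicken_curry_f1, 2))  # 0.75

# Macro average: unweighted mean of the per-class F1 scores from the report
per_class_f1 = [0.75, 0.85, 0.87, 0.73, 0.89, 0.88, 0.96, 0.88, 0.74, 0.85]
macro_f1 = sum(per_class_f1) / len(per_class_f1)
print(round(macro_f1, 2))  # 0.84
```

Since every class has the same support (250 images), the macro and weighted averages coincide here.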

Confusion Matrix¶

In [ ]:

plot_confusion_matrix(test_data.labels, y_test_preds, classes=test_data.class_indices, figsize=(14, 14), text_size=8);

Layers of the Pretrained Model¶

In [ ]:

for layer_number, layer in enumerate(pretrained_model.layers):

    print(layer_number, layer.name)

0 input_2 1 rescaling_1 2 normalization_1 3 stem_conv_pad 4 stem_conv 5 stem_bn 6 stem_activation 7 block1a_dwconv 8 block1a_bn 9 block1a_activation 10 block1a_se_squeeze 11 block1a_se_reshape 12 block1a_se_reduce 13 block1a_se_expand 14 block1a_se_excite 15 block1a_project_conv 16 block1a_project_bn 17 block2a_expand_conv 18 block2a_expand_bn 19 block2a_expand_activation 20 block2a_dwconv_pad 21 block2a_dwconv 22 block2a_bn 23 block2a_activation 24 block2a_se_squeeze 25 block2a_se_reshape 26 block2a_se_reduce 27 block2a_se_expand 28 block2a_se_excite 29 block2a_project_conv 30 block2a_project_bn 31 block2b_expand_conv 32 block2b_expand_bn 33 block2b_expand_activation 34 block2b_dwconv 35 block2b_bn 36 block2b_activation 37 block2b_se_squeeze 38 block2b_se_reshape 39 block2b_se_reduce 40 block2b_se_expand 41 block2b_se_excite 42 block2b_project_conv 43 block2b_project_bn 44 block2b_drop 45 block2b_add 46 block3a_expand_conv 47 block3a_expand_bn 48 block3a_expand_activation 49 block3a_dwconv_pad 50 block3a_dwconv 51 block3a_bn 52 block3a_activation 53 block3a_se_squeeze 54 block3a_se_reshape 55 block3a_se_reduce 56 block3a_se_expand 57 block3a_se_excite 58 block3a_project_conv 59 block3a_project_bn 60 block3b_expand_conv 61 block3b_expand_bn 62 block3b_expand_activation 63 block3b_dwconv 64 block3b_bn 65 block3b_activation 66 block3b_se_squeeze 67 block3b_se_reshape 68 block3b_se_reduce 69 block3b_se_expand 70 block3b_se_excite 71 block3b_project_conv 72 block3b_project_bn 73 block3b_drop 74 block3b_add 75 block4a_expand_conv 76 block4a_expand_bn 77 block4a_expand_activation 78 block4a_dwconv_pad 79 block4a_dwconv 80 block4a_bn 81 block4a_activation 82 block4a_se_squeeze 83 block4a_se_reshape 84 block4a_se_reduce 85 block4a_se_expand 86 block4a_se_excite 87 block4a_project_conv 88 block4a_project_bn 89 block4b_expand_conv 90 block4b_expand_bn 91 block4b_expand_activation 92 block4b_dwconv 93 block4b_bn 94 block4b_activation 95 block4b_se_squeeze 96 
block4b_se_reshape 97 block4b_se_reduce 98 block4b_se_expand 99 block4b_se_excite 100 block4b_project_conv 101 block4b_project_bn 102 block4b_drop 103 block4b_add 104 block4c_expand_conv 105 block4c_expand_bn 106 block4c_expand_activation 107 block4c_dwconv 108 block4c_bn 109 block4c_activation 110 block4c_se_squeeze 111 block4c_se_reshape 112 block4c_se_reduce 113 block4c_se_expand 114 block4c_se_excite 115 block4c_project_conv 116 block4c_project_bn 117 block4c_drop 118 block4c_add 119 block5a_expand_conv 120 block5a_expand_bn 121 block5a_expand_activation 122 block5a_dwconv 123 block5a_bn 124 block5a_activation 125 block5a_se_squeeze 126 block5a_se_reshape 127 block5a_se_reduce 128 block5a_se_expand 129 block5a_se_excite 130 block5a_project_conv 131 block5a_project_bn 132 block5b_expand_conv 133 block5b_expand_bn 134 block5b_expand_activation 135 block5b_dwconv 136 block5b_bn 137 block5b_activation 138 block5b_se_squeeze 139 block5b_se_reshape 140 block5b_se_reduce 141 block5b_se_expand 142 block5b_se_excite 143 block5b_project_conv 144 block5b_project_bn 145 block5b_drop 146 block5b_add 147 block5c_expand_conv 148 block5c_expand_bn 149 block5c_expand_activation 150 block5c_dwconv 151 block5c_bn 152 block5c_activation 153 block5c_se_squeeze 154 block5c_se_reshape 155 block5c_se_reduce 156 block5c_se_expand 157 block5c_se_excite 158 block5c_project_conv 159 block5c_project_bn 160 block5c_drop 161 block5c_add 162 block6a_expand_conv 163 block6a_expand_bn 164 block6a_expand_activation 165 block6a_dwconv_pad 166 block6a_dwconv 167 block6a_bn 168 block6a_activation 169 block6a_se_squeeze 170 block6a_se_reshape 171 block6a_se_reduce 172 block6a_se_expand 173 block6a_se_excite 174 block6a_project_conv 175 block6a_project_bn 176 block6b_expand_conv 177 block6b_expand_bn 178 block6b_expand_activation 179 block6b_dwconv 180 block6b_bn 181 block6b_activation 182 block6b_se_squeeze 183 block6b_se_reshape 184 block6b_se_reduce 185 block6b_se_expand 186 block6b_se_excite 187 
block6b_project_conv 188 block6b_project_bn 189 block6b_drop 190 block6b_add 191 block6c_expand_conv 192 block6c_expand_bn 193 block6c_expand_activation 194 block6c_dwconv 195 block6c_bn 196 block6c_activation 197 block6c_se_squeeze 198 block6c_se_reshape 199 block6c_se_reduce 200 block6c_se_expand 201 block6c_se_excite 202 block6c_project_conv 203 block6c_project_bn 204 block6c_drop 205 block6c_add 206 block6d_expand_conv 207 block6d_expand_bn 208 block6d_expand_activation 209 block6d_dwconv 210 block6d_bn 211 block6d_activation 212 block6d_se_squeeze 213 block6d_se_reshape 214 block6d_se_reduce 215 block6d_se_expand 216 block6d_se_excite 217 block6d_project_conv 218 block6d_project_bn 219 block6d_drop 220 block6d_add 221 block7a_expand_conv 222 block7a_expand_bn 223 block7a_expand_activation 224 block7a_dwconv 225 block7a_bn 226 block7a_activation 227 block7a_se_squeeze 228 block7a_se_reshape 229 block7a_se_reduce 230 block7a_se_expand 231 block7a_se_excite 232 block7a_project_conv 233 block7a_project_bn 234 top_conv 235 top_bn 236 top_activation

In [ ]:

pretrained_model.summary()

Model: "efficientnetb0"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_2 (InputLayer) [(None, None, None, 0

__________________________________________________________________________________________________

rescaling_1 (Rescaling) (None, None, None, 3 0 input_2[0][0]

__________________________________________________________________________________________________

normalization_1 (Normalization) (None, None, None, 3 7 rescaling_1[0][0]

__________________________________________________________________________________________________

stem_conv_pad (ZeroPadding2D) (None, None, None, 3 0 normalization_1[0][0]

__________________________________________________________________________________________________

stem_conv (Conv2D) (None, None, None, 3 864 stem_conv_pad[0][0]

__________________________________________________________________________________________________

stem_bn (BatchNormalization) (None, None, None, 3 128 stem_conv[0][0]

__________________________________________________________________________________________________

stem_activation (Activation) (None, None, None, 3 0 stem_bn[0][0]

__________________________________________________________________________________________________

block1a_dwconv (DepthwiseConv2D (None, None, None, 3 288 stem_activation[0][0]

__________________________________________________________________________________________________

block1a_bn (BatchNormalization) (None, None, None, 3 128 block1a_dwconv[0][0]

__________________________________________________________________________________________________

block1a_activation (Activation) (None, None, None, 3 0 block1a_bn[0][0]

__________________________________________________________________________________________________

block1a_se_squeeze (GlobalAvera (None, 32) 0 block1a_activation[0][0]

__________________________________________________________________________________________________

block1a_se_reshape (Reshape) (None, 1, 1, 32) 0 block1a_se_squeeze[0][0]

__________________________________________________________________________________________________

block1a_se_reduce (Conv2D) (None, 1, 1, 8) 264 block1a_se_reshape[0][0]

__________________________________________________________________________________________________

block1a_se_expand (Conv2D) (None, 1, 1, 32) 288 block1a_se_reduce[0][0]

__________________________________________________________________________________________________

block1a_se_excite (Multiply) (None, None, None, 3 0 block1a_activation[0][0]

block1a_se_expand[0][0]

__________________________________________________________________________________________________

block1a_project_conv (Conv2D) (None, None, None, 1 512 block1a_se_excite[0][0]

__________________________________________________________________________________________________

block1a_project_bn (BatchNormal (None, None, None, 1 64 block1a_project_conv[0][0]

__________________________________________________________________________________________________

block2a_expand_conv (Conv2D) (None, None, None, 9 1536 block1a_project_bn[0][0]

__________________________________________________________________________________________________

block2a_expand_bn (BatchNormali (None, None, None, 9 384 block2a_expand_conv[0][0]

__________________________________________________________________________________________________

block2a_expand_activation (Acti (None, None, None, 9 0 block2a_expand_bn[0][0]

__________________________________________________________________________________________________

block2a_dwconv_pad (ZeroPadding (None, None, None, 9 0 block2a_expand_activation[0][0]

__________________________________________________________________________________________________

block2a_dwconv (DepthwiseConv2D (None, None, None, 9 864 block2a_dwconv_pad[0][0]

__________________________________________________________________________________________________

block2a_bn (BatchNormalization) (None, None, None, 9 384 block2a_dwconv[0][0]

__________________________________________________________________________________________________

block2a_activation (Activation) (None, None, None, 9 0 block2a_bn[0][0]

__________________________________________________________________________________________________

block2a_se_squeeze (GlobalAvera (None, 96) 0 block2a_activation[0][0]

__________________________________________________________________________________________________

block2a_se_reshape (Reshape) (None, 1, 1, 96) 0 block2a_se_squeeze[0][0]

__________________________________________________________________________________________________

block2a_se_reduce (Conv2D) (None, 1, 1, 4) 388 block2a_se_reshape[0][0]

__________________________________________________________________________________________________

block2a_se_expand (Conv2D) (None, 1, 1, 96) 480 block2a_se_reduce[0][0]

__________________________________________________________________________________________________

block2a_se_excite (Multiply) (None, None, None, 9 0 block2a_activation[0][0]

block2a_se_expand[0][0]

__________________________________________________________________________________________________

block2a_project_conv (Conv2D) (None, None, None, 2 2304 block2a_se_excite[0][0]

__________________________________________________________________________________________________

block2a_project_bn (BatchNormal (None, None, None, 2 96 block2a_project_conv[0][0]

__________________________________________________________________________________________________

block2b_expand_conv (Conv2D) (None, None, None, 1 3456 block2a_project_bn[0][0]

__________________________________________________________________________________________________

block2b_expand_bn (BatchNormali (None, None, None, 1 576 block2b_expand_conv[0][0]

__________________________________________________________________________________________________

block2b_expand_activation (Acti (None, None, None, 1 0 block2b_expand_bn[0][0]

__________________________________________________________________________________________________

block2b_dwconv (DepthwiseConv2D (None, None, None, 1 1296 block2b_expand_activation[0][0]

__________________________________________________________________________________________________

block2b_bn (BatchNormalization) (None, None, None, 1 576 block2b_dwconv[0][0]

__________________________________________________________________________________________________

block2b_activation (Activation) (None, None, None, 1 0 block2b_bn[0][0]

__________________________________________________________________________________________________

block2b_se_squeeze (GlobalAvera (None, 144) 0 block2b_activation[0][0]

__________________________________________________________________________________________________

block2b_se_reshape (Reshape) (None, 1, 1, 144) 0 block2b_se_squeeze[0][0]

__________________________________________________________________________________________________

block2b_se_reduce (Conv2D) (None, 1, 1, 6) 870 block2b_se_reshape[0][0]

__________________________________________________________________________________________________

block2b_se_expand (Conv2D) (None, 1, 1, 144) 1008 block2b_se_reduce[0][0]

__________________________________________________________________________________________________

block2b_se_excite (Multiply) (None, None, None, 1 0 block2b_activation[0][0]

block2b_se_expand[0][0]

__________________________________________________________________________________________________

block2b_project_conv (Conv2D) (None, None, None, 2 3456 block2b_se_excite[0][0]

__________________________________________________________________________________________________

block2b_project_bn (BatchNormal (None, None, None, 2 96 block2b_project_conv[0][0]

__________________________________________________________________________________________________

block2b_drop (Dropout) (None, None, None, 2 0 block2b_project_bn[0][0]

__________________________________________________________________________________________________

block2b_add (Add) (None, None, None, 2 0 block2b_drop[0][0]

block2a_project_bn[0][0]

__________________________________________________________________________________________________

block3a_expand_conv (Conv2D) (None, None, None, 1 3456 block2b_add[0][0]

__________________________________________________________________________________________________

block3a_expand_bn (BatchNormali (None, None, None, 1 576 block3a_expand_conv[0][0]

__________________________________________________________________________________________________

block3a_expand_activation (Acti (None, None, None, 1 0 block3a_expand_bn[0][0]

__________________________________________________________________________________________________

block3a_dwconv_pad (ZeroPadding (None, None, None, 1 0 block3a_expand_activation[0][0]

__________________________________________________________________________________________________

block3a_dwconv (DepthwiseConv2D (None, None, None, 1 3600 block3a_dwconv_pad[0][0]

__________________________________________________________________________________________________

block3a_bn (BatchNormalization) (None, None, None, 1 576 block3a_dwconv[0][0]

__________________________________________________________________________________________________

block3a_activation (Activation) (None, None, None, 1 0 block3a_bn[0][0]

__________________________________________________________________________________________________

block3a_se_squeeze (GlobalAvera (None, 144) 0 block3a_activation[0][0]

__________________________________________________________________________________________________

block3a_se_reshape (Reshape) (None, 1, 1, 144) 0 block3a_se_squeeze[0][0]

__________________________________________________________________________________________________

block3a_se_reduce (Conv2D) (None, 1, 1, 6) 870 block3a_se_reshape[0][0]

__________________________________________________________________________________________________

block3a_se_expand (Conv2D) (None, 1, 1, 144) 1008 block3a_se_reduce[0][0]

__________________________________________________________________________________________________

block3a_se_excite (Multiply) (None, None, None, 1 0 block3a_activation[0][0]

block3a_se_expand[0][0]

__________________________________________________________________________________________________

block3a_project_conv (Conv2D) (None, None, None, 4 5760 block3a_se_excite[0][0]

__________________________________________________________________________________________________

block3a_project_bn (BatchNormal (None, None, None, 4 160 block3a_project_conv[0][0]

__________________________________________________________________________________________________

block3b_expand_conv (Conv2D) (None, None, None, 2 9600 block3a_project_bn[0][0]

__________________________________________________________________________________________________

block3b_expand_bn (BatchNormali (None, None, None, 2 960 block3b_expand_conv[0][0]

__________________________________________________________________________________________________

block3b_expand_activation (Acti (None, None, None, 2 0 block3b_expand_bn[0][0]

__________________________________________________________________________________________________

block3b_dwconv (DepthwiseConv2D (None, None, None, 2 6000 block3b_expand_activation[0][0]

__________________________________________________________________________________________________

block3b_bn (BatchNormalization) (None, None, None, 2 960 block3b_dwconv[0][0]

__________________________________________________________________________________________________

block3b_activation (Activation) (None, None, None, 2 0 block3b_bn[0][0]

__________________________________________________________________________________________________

block3b_se_squeeze (GlobalAvera (None, 240) 0 block3b_activation[0][0]

__________________________________________________________________________________________________

block3b_se_reshape (Reshape) (None, 1, 1, 240) 0 block3b_se_squeeze[0][0]

__________________________________________________________________________________________________

block3b_se_reduce (Conv2D) (None, 1, 1, 10) 2410 block3b_se_reshape[0][0]

__________________________________________________________________________________________________

block3b_se_expand (Conv2D) (None, 1, 1, 240) 2640 block3b_se_reduce[0][0]

__________________________________________________________________________________________________

block3b_se_excite (Multiply) (None, None, None, 2 0 block3b_activation[0][0]

block3b_se_expand[0][0]

__________________________________________________________________________________________________

block3b_project_conv (Conv2D) (None, None, None, 4 9600 block3b_se_excite[0][0]

__________________________________________________________________________________________________

block3b_project_bn (BatchNormal (None, None, None, 4 160 block3b_project_conv[0][0]

__________________________________________________________________________________________________

block3b_drop (Dropout) (None, None, None, 4 0 block3b_project_bn[0][0]

__________________________________________________________________________________________________

block3b_add (Add) (None, None, None, 4 0 block3b_drop[0][0]

block3a_project_bn[0][0]

__________________________________________________________________________________________________

block4a_expand_conv (Conv2D) (None, None, None, 2 9600 block3b_add[0][0]

__________________________________________________________________________________________________

block4a_expand_bn (BatchNormali (None, None, None, 2 960 block4a_expand_conv[0][0]

__________________________________________________________________________________________________

block4a_expand_activation (Acti (None, None, None, 2 0 block4a_expand_bn[0][0]

__________________________________________________________________________________________________

block4a_dwconv_pad (ZeroPadding (None, None, None, 2 0 block4a_expand_activation[0][0]

__________________________________________________________________________________________________

block4a_dwconv (DepthwiseConv2D (None, None, None, 2 2160 block4a_dwconv_pad[0][0]

__________________________________________________________________________________________________

block4a_bn (BatchNormalization) (None, None, None, 2 960 block4a_dwconv[0][0]

__________________________________________________________________________________________________

block4a_activation (Activation) (None, None, None, 2 0 block4a_bn[0][0]

__________________________________________________________________________________________________

block4a_se_squeeze (GlobalAvera (None, 240) 0 block4a_activation[0][0]

__________________________________________________________________________________________________

block4a_se_reshape (Reshape) (None, 1, 1, 240) 0 block4a_se_squeeze[0][0]

__________________________________________________________________________________________________

block4a_se_reduce (Conv2D) (None, 1, 1, 10) 2410 block4a_se_reshape[0][0]

__________________________________________________________________________________________________

block4a_se_expand (Conv2D) (None, 1, 1, 240) 2640 block4a_se_reduce[0][0]

__________________________________________________________________________________________________

block4a_se_excite (Multiply) (None, None, None, 2 0 block4a_activation[0][0]

block4a_se_expand[0][0]

__________________________________________________________________________________________________

block4a_project_conv (Conv2D) (None, None, None, 8 19200 block4a_se_excite[0][0]

__________________________________________________________________________________________________

block4a_project_bn (BatchNormal (None, None, None, 8 320 block4a_project_conv[0][0]

__________________________________________________________________________________________________

block4b_expand_conv (Conv2D) (None, None, None, 4 38400 block4a_project_bn[0][0]

__________________________________________________________________________________________________

block4b_expand_bn (BatchNormali (None, None, None, 4 1920 block4b_expand_conv[0][0]

__________________________________________________________________________________________________

block4b_expand_activation (Acti (None, None, None, 4 0 block4b_expand_bn[0][0]

__________________________________________________________________________________________________

block4b_dwconv (DepthwiseConv2D (None, None, None, 4 4320 block4b_expand_activation[0][0]

__________________________________________________________________________________________________

block4b_bn (BatchNormalization) (None, None, None, 4 1920 block4b_dwconv[0][0]

__________________________________________________________________________________________________

block4b_activation (Activation) (None, None, None, 4 0 block4b_bn[0][0]

__________________________________________________________________________________________________

block4b_se_squeeze (GlobalAvera (None, 480) 0 block4b_activation[0][0]

__________________________________________________________________________________________________

block4b_se_reshape (Reshape) (None, 1, 1, 480) 0 block4b_se_squeeze[0][0]

__________________________________________________________________________________________________

block4b_se_reduce (Conv2D) (None, 1, 1, 20) 9620 block4b_se_reshape[0][0]

__________________________________________________________________________________________________

block4b_se_expand (Conv2D) (None, 1, 1, 480) 10080 block4b_se_reduce[0][0]

__________________________________________________________________________________________________

block4b_se_excite (Multiply) (None, None, None, 4 0 block4b_activation[0][0]

block4b_se_expand[0][0]

__________________________________________________________________________________________________

block4b_project_conv (Conv2D) (None, None, None, 8 38400 block4b_se_excite[0][0]

__________________________________________________________________________________________________

block4b_project_bn (BatchNormal (None, None, None, 8 320 block4b_project_conv[0][0]

__________________________________________________________________________________________________

block4b_drop (Dropout) (None, None, None, 8 0 block4b_project_bn[0][0]

__________________________________________________________________________________________________

block4b_add (Add) (None, None, None, 8 0 block4b_drop[0][0]

block4a_project_bn[0][0]

__________________________________________________________________________________________________

block4c_expand_conv (Conv2D) (None, None, None, 4 38400 block4b_add[0][0]

__________________________________________________________________________________________________

block4c_expand_bn (BatchNormali (None, None, None, 4 1920 block4c_expand_conv[0][0]

__________________________________________________________________________________________________

block4c_expand_activation (Acti (None, None, None, 4 0 block4c_expand_bn[0][0]

__________________________________________________________________________________________________

block4c_dwconv (DepthwiseConv2D (None, None, None, 4 4320 block4c_expand_activation[0][0]

__________________________________________________________________________________________________

block4c_bn (BatchNormalization) (None, None, None, 4 1920 block4c_dwconv[0][0]

__________________________________________________________________________________________________

block4c_activation (Activation) (None, None, None, 4 0 block4c_bn[0][0]

__________________________________________________________________________________________________

block4c_se_squeeze (GlobalAvera (None, 480) 0 block4c_activation[0][0]

__________________________________________________________________________________________________

block4c_se_reshape (Reshape) (None, 1, 1, 480) 0 block4c_se_squeeze[0][0]

__________________________________________________________________________________________________

block4c_se_reduce (Conv2D) (None, 1, 1, 20) 9620 block4c_se_reshape[0][0]

__________________________________________________________________________________________________

block4c_se_expand (Conv2D) (None, 1, 1, 480) 10080 block4c_se_reduce[0][0]

__________________________________________________________________________________________________

block4c_se_excite (Multiply) (None, None, None, 4 0 block4c_activation[0][0]

block4c_se_expand[0][0]

__________________________________________________________________________________________________

block4c_project_conv (Conv2D) (None, None, None, 8 38400 block4c_se_excite[0][0]

__________________________________________________________________________________________________

block4c_project_bn (BatchNormal (None, None, None, 8 320 block4c_project_conv[0][0]

__________________________________________________________________________________________________

block4c_drop (Dropout) (None, None, None, 8 0 block4c_project_bn[0][0]

__________________________________________________________________________________________________

block4c_add (Add) (None, None, None, 8 0 block4c_drop[0][0]

block4b_add[0][0]

__________________________________________________________________________________________________

block5a_expand_conv (Conv2D) (None, None, None, 4 38400 block4c_add[0][0]

__________________________________________________________________________________________________

block5a_expand_bn (BatchNormali (None, None, None, 4 1920 block5a_expand_conv[0][0]

__________________________________________________________________________________________________

block5a_expand_activation (Acti (None, None, None, 4 0 block5a_expand_bn[0][0]

__________________________________________________________________________________________________

block5a_dwconv (DepthwiseConv2D (None, None, None, 4 12000 block5a_expand_activation[0][0]

__________________________________________________________________________________________________

block5a_bn (BatchNormalization) (None, None, None, 4 1920 block5a_dwconv[0][0]

__________________________________________________________________________________________________

block5a_activation (Activation) (None, None, None, 4 0 block5a_bn[0][0]

__________________________________________________________________________________________________

block5a_se_squeeze (GlobalAvera (None, 480) 0 block5a_activation[0][0]

__________________________________________________________________________________________________

block5a_se_reshape (Reshape) (None, 1, 1, 480) 0 block5a_se_squeeze[0][0]

__________________________________________________________________________________________________

block5a_se_reduce (Conv2D) (None, 1, 1, 20) 9620 block5a_se_reshape[0][0]

__________________________________________________________________________________________________

block5a_se_expand (Conv2D) (None, 1, 1, 480) 10080 block5a_se_reduce[0][0]

__________________________________________________________________________________________________

block5a_se_excite (Multiply) (None, None, None, 4 0 block5a_activation[0][0]

block5a_se_expand[0][0]

__________________________________________________________________________________________________

block5a_project_conv (Conv2D) (None, None, None, 1 53760 block5a_se_excite[0][0]

__________________________________________________________________________________________________

block5a_project_bn (BatchNormal (None, None, None, 1 448 block5a_project_conv[0][0]

__________________________________________________________________________________________________

block5b_expand_conv (Conv2D) (None, None, None, 6 75264 block5a_project_bn[0][0]

__________________________________________________________________________________________________

block5b_expand_bn (BatchNormali (None, None, None, 6 2688 block5b_expand_conv[0][0]

__________________________________________________________________________________________________

block5b_expand_activation (Acti (None, None, None, 6 0 block5b_expand_bn[0][0]

__________________________________________________________________________________________________

block5b_dwconv (DepthwiseConv2D (None, None, None, 6 16800 block5b_expand_activation[0][0]

__________________________________________________________________________________________________

block5b_bn (BatchNormalization) (None, None, None, 6 2688 block5b_dwconv[0][0]

__________________________________________________________________________________________________

block5b_activation (Activation) (None, None, None, 6 0 block5b_bn[0][0]

__________________________________________________________________________________________________

block5b_se_squeeze (GlobalAvera (None, 672) 0 block5b_activation[0][0]

__________________________________________________________________________________________________

block5b_se_reshape (Reshape) (None, 1, 1, 672) 0 block5b_se_squeeze[0][0]

__________________________________________________________________________________________________

block5b_se_reduce (Conv2D) (None, 1, 1, 28) 18844 block5b_se_reshape[0][0]

__________________________________________________________________________________________________

block5b_se_expand (Conv2D) (None, 1, 1, 672) 19488 block5b_se_reduce[0][0]

__________________________________________________________________________________________________

block5b_se_excite (Multiply) (None, None, None, 6 0 block5b_activation[0][0]

block5b_se_expand[0][0]

__________________________________________________________________________________________________

block5b_project_conv (Conv2D) (None, None, None, 1 75264 block5b_se_excite[0][0]

__________________________________________________________________________________________________

block5b_project_bn (BatchNormal (None, None, None, 1 448 block5b_project_conv[0][0]

__________________________________________________________________________________________________

block5b_drop (Dropout) (None, None, None, 1 0 block5b_project_bn[0][0]

__________________________________________________________________________________________________

block5b_add (Add) (None, None, None, 1 0 block5b_drop[0][0]

block5a_project_bn[0][0]

__________________________________________________________________________________________________

block5c_expand_conv (Conv2D) (None, None, None, 6 75264 block5b_add[0][0]

__________________________________________________________________________________________________

block5c_expand_bn (BatchNormali (None, None, None, 6 2688 block5c_expand_conv[0][0]

__________________________________________________________________________________________________

block5c_expand_activation (Acti (None, None, None, 6 0 block5c_expand_bn[0][0]

__________________________________________________________________________________________________

block5c_dwconv (DepthwiseConv2D (None, None, None, 6 16800 block5c_expand_activation[0][0]

__________________________________________________________________________________________________

block5c_bn (BatchNormalization) (None, None, None, 6 2688 block5c_dwconv[0][0]

__________________________________________________________________________________________________

block5c_activation (Activation) (None, None, None, 6 0 block5c_bn[0][0]

__________________________________________________________________________________________________

block5c_se_squeeze (GlobalAvera (None, 672) 0 block5c_activation[0][0]

__________________________________________________________________________________________________

block5c_se_reshape (Reshape) (None, 1, 1, 672) 0 block5c_se_squeeze[0][0]

__________________________________________________________________________________________________

block5c_se_reduce (Conv2D) (None, 1, 1, 28) 18844 block5c_se_reshape[0][0]

__________________________________________________________________________________________________

block5c_se_expand (Conv2D) (None, 1, 1, 672) 19488 block5c_se_reduce[0][0]

__________________________________________________________________________________________________

block5c_se_excite (Multiply) (None, None, None, 6 0 block5c_activation[0][0]

block5c_se_expand[0][0]

__________________________________________________________________________________________________

block5c_project_conv (Conv2D) (None, None, None, 1 75264 block5c_se_excite[0][0]

__________________________________________________________________________________________________

block5c_project_bn (BatchNormal (None, None, None, 1 448 block5c_project_conv[0][0]

__________________________________________________________________________________________________

block5c_drop (Dropout) (None, None, None, 1 0 block5c_project_bn[0][0]

__________________________________________________________________________________________________

block5c_add (Add) (None, None, None, 1 0 block5c_drop[0][0]

block5b_add[0][0]

__________________________________________________________________________________________________

block6a_expand_conv (Conv2D) (None, None, None, 6 75264 block5c_add[0][0]

__________________________________________________________________________________________________

block6a_expand_bn (BatchNormali (None, None, None, 6 2688 block6a_expand_conv[0][0]

__________________________________________________________________________________________________

block6a_expand_activation (Acti (None, None, None, 6 0 block6a_expand_bn[0][0]

__________________________________________________________________________________________________

block6a_dwconv_pad (ZeroPadding (None, None, None, 6 0 block6a_expand_activation[0][0]

__________________________________________________________________________________________________

block6a_dwconv (DepthwiseConv2D (None, None, None, 6 16800 block6a_dwconv_pad[0][0]

__________________________________________________________________________________________________

block6a_bn (BatchNormalization) (None, None, None, 6 2688 block6a_dwconv[0][0]

__________________________________________________________________________________________________

block6a_activation (Activation) (None, None, None, 6 0 block6a_bn[0][0]

__________________________________________________________________________________________________

block6a_se_squeeze (GlobalAvera (None, 672) 0 block6a_activation[0][0]

__________________________________________________________________________________________________

block6a_se_reshape (Reshape) (None, 1, 1, 672) 0 block6a_se_squeeze[0][0]

__________________________________________________________________________________________________

block6a_se_reduce (Conv2D) (None, 1, 1, 28) 18844 block6a_se_reshape[0][0]

__________________________________________________________________________________________________

block6a_se_expand (Conv2D) (None, 1, 1, 672) 19488 block6a_se_reduce[0][0]

__________________________________________________________________________________________________

block6a_se_excite (Multiply) (None, None, None, 6 0 block6a_activation[0][0]

block6a_se_expand[0][0]

__________________________________________________________________________________________________

block6a_project_conv (Conv2D) (None, None, None, 1 129024 block6a_se_excite[0][0]

__________________________________________________________________________________________________

block6a_project_bn (BatchNormal (None, None, None, 1 768 block6a_project_conv[0][0]

__________________________________________________________________________________________________

block6b_expand_conv (Conv2D) (None, None, None, 1 221184 block6a_project_bn[0][0]

__________________________________________________________________________________________________

block6b_expand_bn (BatchNormali (None, None, None, 1 4608 block6b_expand_conv[0][0]

__________________________________________________________________________________________________

block6b_expand_activation (Acti (None, None, None, 1 0 block6b_expand_bn[0][0]

__________________________________________________________________________________________________

block6b_dwconv (DepthwiseConv2D (None, None, None, 1 28800 block6b_expand_activation[0][0]

__________________________________________________________________________________________________

block6b_bn (BatchNormalization) (None, None, None, 1 4608 block6b_dwconv[0][0]

__________________________________________________________________________________________________

block6b_activation (Activation) (None, None, None, 1 0 block6b_bn[0][0]

__________________________________________________________________________________________________

block6b_se_squeeze (GlobalAvera (None, 1152) 0 block6b_activation[0][0]

__________________________________________________________________________________________________

block6b_se_reshape (Reshape) (None, 1, 1, 1152) 0 block6b_se_squeeze[0][0]

__________________________________________________________________________________________________

block6b_se_reduce (Conv2D) (None, 1, 1, 48) 55344 block6b_se_reshape[0][0]

__________________________________________________________________________________________________

block6b_se_expand (Conv2D) (None, 1, 1, 1152) 56448 block6b_se_reduce[0][0]

__________________________________________________________________________________________________

block6b_se_excite (Multiply) (None, None, None, 1 0 block6b_activation[0][0]

block6b_se_expand[0][0]

__________________________________________________________________________________________________

block6b_project_conv (Conv2D) (None, None, None, 1 221184 block6b_se_excite[0][0]

__________________________________________________________________________________________________

block6b_project_bn (BatchNormal (None, None, None, 1 768 block6b_project_conv[0][0]

__________________________________________________________________________________________________

block6b_drop (Dropout) (None, None, None, 1 0 block6b_project_bn[0][0]

__________________________________________________________________________________________________

block6b_add (Add) (None, None, None, 1 0 block6b_drop[0][0]

block6a_project_bn[0][0]

__________________________________________________________________________________________________

block6c_expand_conv (Conv2D) (None, None, None, 1 221184 block6b_add[0][0]

__________________________________________________________________________________________________

block6c_expand_bn (BatchNormali (None, None, None, 1 4608 block6c_expand_conv[0][0]

__________________________________________________________________________________________________

block6c_expand_activation (Acti (None, None, None, 1 0 block6c_expand_bn[0][0]

__________________________________________________________________________________________________

block6c_dwconv (DepthwiseConv2D (None, None, None, 1 28800 block6c_expand_activation[0][0]

__________________________________________________________________________________________________

block6c_bn (BatchNormalization) (None, None, None, 1 4608 block6c_dwconv[0][0]

__________________________________________________________________________________________________

block6c_activation (Activation) (None, None, None, 1 0 block6c_bn[0][0]

__________________________________________________________________________________________________

block6c_se_squeeze (GlobalAvera (None, 1152) 0 block6c_activation[0][0]

__________________________________________________________________________________________________

block6c_se_reshape (Reshape) (None, 1, 1, 1152) 0 block6c_se_squeeze[0][0]

__________________________________________________________________________________________________

block6c_se_reduce (Conv2D) (None, 1, 1, 48) 55344 block6c_se_reshape[0][0]

__________________________________________________________________________________________________

block6c_se_expand (Conv2D) (None, 1, 1, 1152) 56448 block6c_se_reduce[0][0]

__________________________________________________________________________________________________

block6c_se_excite (Multiply) (None, None, None, 1 0 block6c_activation[0][0]

block6c_se_expand[0][0]

__________________________________________________________________________________________________

block6c_project_conv (Conv2D) (None, None, None, 1 221184 block6c_se_excite[0][0]

__________________________________________________________________________________________________

block6c_project_bn (BatchNormal (None, None, None, 1 768 block6c_project_conv[0][0]

__________________________________________________________________________________________________

block6c_drop (Dropout) (None, None, None, 1 0 block6c_project_bn[0][0]

__________________________________________________________________________________________________

block6c_add (Add) (None, None, None, 1 0 block6c_drop[0][0]

block6b_add[0][0]

__________________________________________________________________________________________________

block6d_expand_conv (Conv2D) (None, None, None, 1 221184 block6c_add[0][0]

__________________________________________________________________________________________________

block6d_expand_bn (BatchNormali (None, None, None, 1 4608 block6d_expand_conv[0][0]

__________________________________________________________________________________________________

block6d_expand_activation (Acti (None, None, None, 1 0 block6d_expand_bn[0][0]

__________________________________________________________________________________________________

block6d_dwconv (DepthwiseConv2D (None, None, None, 1 28800 block6d_expand_activation[0][0]

__________________________________________________________________________________________________

block6d_bn (BatchNormalization) (None, None, None, 1 4608 block6d_dwconv[0][0]

__________________________________________________________________________________________________

block6d_activation (Activation) (None, None, None, 1 0 block6d_bn[0][0]

__________________________________________________________________________________________________

block6d_se_squeeze (GlobalAvera (None, 1152) 0 block6d_activation[0][0]

__________________________________________________________________________________________________

block6d_se_reshape (Reshape) (None, 1, 1, 1152) 0 block6d_se_squeeze[0][0]

__________________________________________________________________________________________________

block6d_se_reduce (Conv2D) (None, 1, 1, 48) 55344 block6d_se_reshape[0][0]

__________________________________________________________________________________________________

block6d_se_expand (Conv2D) (None, 1, 1, 1152) 56448 block6d_se_reduce[0][0]

__________________________________________________________________________________________________

block6d_se_excite (Multiply) (None, None, None, 1 0 block6d_activation[0][0]

block6d_se_expand[0][0]

__________________________________________________________________________________________________

block6d_project_conv (Conv2D) (None, None, None, 1 221184 block6d_se_excite[0][0]

__________________________________________________________________________________________________

block6d_project_bn (BatchNormal (None, None, None, 1 768 block6d_project_conv[0][0]

__________________________________________________________________________________________________

block6d_drop (Dropout) (None, None, None, 1 0 block6d_project_bn[0][0]

__________________________________________________________________________________________________

block6d_add (Add) (None, None, None, 1 0 block6d_drop[0][0]

block6c_add[0][0]

__________________________________________________________________________________________________

block7a_expand_conv (Conv2D) (None, None, None, 1 221184 block6d_add[0][0]

__________________________________________________________________________________________________

block7a_expand_bn (BatchNormali (None, None, None, 1 4608 block7a_expand_conv[0][0]

__________________________________________________________________________________________________

block7a_expand_activation (Acti (None, None, None, 1 0 block7a_expand_bn[0][0]

__________________________________________________________________________________________________

block7a_dwconv (DepthwiseConv2D (None, None, None, 1 10368 block7a_expand_activation[0][0]

__________________________________________________________________________________________________

block7a_bn (BatchNormalization) (None, None, None, 1 4608 block7a_dwconv[0][0]

__________________________________________________________________________________________________

block7a_activation (Activation) (None, None, None, 1 0 block7a_bn[0][0]

__________________________________________________________________________________________________

block7a_se_squeeze (GlobalAvera (None, 1152) 0 block7a_activation[0][0]

__________________________________________________________________________________________________

block7a_se_reshape (Reshape) (None, 1, 1, 1152) 0 block7a_se_squeeze[0][0]

__________________________________________________________________________________________________

block7a_se_reduce (Conv2D) (None, 1, 1, 48) 55344 block7a_se_reshape[0][0]

__________________________________________________________________________________________________

block7a_se_expand (Conv2D) (None, 1, 1, 1152) 56448 block7a_se_reduce[0][0]

__________________________________________________________________________________________________

block7a_se_excite (Multiply) (None, None, None, 1 0 block7a_activation[0][0]

block7a_se_expand[0][0]

__________________________________________________________________________________________________

block7a_project_conv (Conv2D) (None, None, None, 3 368640 block7a_se_excite[0][0]

__________________________________________________________________________________________________

block7a_project_bn (BatchNormal (None, None, None, 3 1280 block7a_project_conv[0][0]

__________________________________________________________________________________________________

top_conv (Conv2D) (None, None, None, 1 409600 block7a_project_bn[0][0]

__________________________________________________________________________________________________

top_bn (BatchNormalization) (None, None, None, 1 5120 top_conv[0][0]

__________________________________________________________________________________________________

top_activation (Activation) (None, None, None, 1 0 top_bn[0][0]

==================================================================================================

Total params: 4,049,571

Trainable params: 0

Non-trainable params: 4,049,571

__________________________________________________________________________________________________
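The parameter counts in the summary above can be checked by hand. The sketch below is not part of the original notebook: the helper functions are hypothetical, and the channel widths (40 and 240) and the 5x5 depthwise kernel for `block3b` are inferred from the printed counts, because the summary truncates the output shapes.

```python
def conv2d_params(kernel, c_in, c_out, bias=True):
    """Weights of a kernel x kernel convolution, plus one bias per output channel."""
    return kernel * kernel * c_in * c_out + (c_out if bias else 0)


def depthwise_params(kernel, channels):
    """One kernel x kernel filter per channel, no bias."""
    return kernel * kernel * channels


def batchnorm_params(channels):
    """gamma, beta, moving mean and moving variance for each channel."""
    return 4 * channels


# Assumed widths for block3b: 40 channels in/out, expanded to 240 inside the block.
counts = {
    "block3b_expand_conv": conv2d_params(1, 40, 240, bias=False),
    "block3b_expand_bn": batchnorm_params(240),
    "block3b_dwconv": depthwise_params(5, 240),
    "block3b_se_reduce": conv2d_params(1, 240, 10),
    "block3b_se_expand": conv2d_params(1, 10, 240),
    "block3b_project_conv": conv2d_params(1, 240, 40, bias=False),
    "block3b_project_bn": batchnorm_params(40),
}

for name, n_params in counts.items():
    print(f"{name:<22} {n_params}")
```

Each value agrees with the corresponding `Param #` entry for `block3b` in the summary (9600, 960, 6000, 2410, 2640, 9600, 160).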

Explore the whole Model¶

In [ ]:

model.summary()

Model: "model_1"

_________________________________________________________________

Layer (type)                 Output Shape              Param #

=================================================================

input_layer (InputLayer)     [(None, 224, 224, 3)]     0

_________________________________________________________________

efficientnetb0 (Functional)  (None, None, None, 1280)  4049571

_________________________________________________________________

global_avg_pooling_layer (Gl (None, 1280)              0

_________________________________________________________________

output_layer (Dense)         (None, 10)                12810

=================================================================

Total params: 4,062,381

Trainable params: 12,810

Non-trainable params: 4,049,571

_________________________________________________________________
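The trainable and non-trainable totals follow directly from the layer shapes. A minimal arithmetic sketch, with variable names chosen only for illustration:

```python
pooled_features = 1280        # width of the global average pooling output
num_classes = 10              # units in the Dense output layer
frozen_base_params = 4_049_571  # EfficientNetB0 backbone, all frozen

# Dense layer: one weight per (feature, class) pair plus one bias per class
dense_params = pooled_features * num_classes + num_classes
total_params = frozen_base_params + dense_params

print(dense_params)   # 12810
print(total_params)   # 4062381
```

Only the 12,810 parameters of the output layer are updated during feature extraction; the backbone contributes every non-trainable parameter.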

In [ ]:

for layer in model.layers:
    print(layer.name, layer.trainable)

input_layer True

efficientnetb0 False

global_avg_pooling_layer True

output_layer True
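Fine-tuning flips the `trainable` flag back on for the last few layers of the frozen base model. The sketch below shows one possible way to do that. `unfreeze_top_layers` is a hypothetical helper, not part of the notebook or of Keras, and `fake_base` is a plain-Python stand-in used so the example runs without TensorFlow. It assumes only that the model exposes `.layers` and that each layer has `.name` and `.trainable`, as Keras models do.

```python
from types import SimpleNamespace


def unfreeze_top_layers(base_model, n):
    """Make only the last `n` layers of `base_model` trainable.

    Returns the names of the layers that end up trainable.
    """
    # The container itself must be trainable, otherwise its children are ignored.
    base_model.trainable = True
    cutoff = len(base_model.layers) - n
    for index, layer in enumerate(base_model.layers):
        layer.trainable = index >= cutoff
    return [layer.name for layer in base_model.layers if layer.trainable]


# Stand-in for the tail of the frozen EfficientNetB0 base (layer names from the summary).
layer_names = ["block7a_project_conv", "block7a_project_bn",
               "top_conv", "top_bn", "top_activation"]
fake_base = SimpleNamespace(
    trainable=False,
    layers=[SimpleNamespace(name=name, trainable=False) for name in layer_names],
)

print(unfreeze_top_layers(fake_base, 3))
# ['top_conv', 'top_bn', 'top_activation']
```

With a real Keras model, the model has to be compiled again after changing `trainable` for the change to take effect, and fine-tuning is usually done with a lower learning rate than the feature-extraction phase.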

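Exploring GlobalAveragePooling2D¶

- Averages across the inner (height and width) dimensions

- Smooths out spatial noise in the feature maps

- Reduces the dimensionality, from (batch, height, width, channels) to (batch, channels)

- Summarizes each depth channel (feature map) as a single value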

In [ ]:

# Create a random tensor

input_shape = (1, 4, 4, 3)

input_tensor = tf.random.normal(input_shape)

print(f'Shape of the input tensor {input_tensor.shape}')

# Apply GlobalAveragePool2D

global_avg_pooled_tensor = layers.GlobalAveragePooling2D()(input_tensor)

print(f'Shape after GlobalAveragePooling2D: {global_avg_pooled_tensor.shape}')

Shape of the input tensor (1, 4, 4, 3)
Shape after GlobalAveragePooling2D: (1, 3)

In [ ]:

# Same as GlobalAveragePooling2D
tf.reduce_mean(input_tensor, axis=[1, 2]).shape

Out[ ]:

TensorShape([1, 3])
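The cells above compare shapes only. To see that the values are per-channel means as well, here is a minimal NumPy sketch (NumPy stands in for TensorFlow here, and the names `feature_map`, `pooled` and `manual_channel_0` are illustrative). It averages over the height and width axes and checks one channel by hand.

```python
import numpy as np

rng = np.random.default_rng(42)
feature_map = rng.normal(size=(1, 4, 4, 3))  # (batch, height, width, channels)

# Global average pooling: mean over the height and width axes
pooled = feature_map.mean(axis=(1, 2))

# Manual check for channel 0: sum of its 16 spatial values divided by 16
manual_channel_0 = feature_map[0, :, :, 0].sum() / (4 * 4)

print(pooled.shape)
print(np.isclose(pooled[0, 0], manual_channel_0))
```

Each of the 3 channels collapses from a 4 x 4 grid to one number, which is why the 1280-channel EfficientNetB0 output becomes a 1280-dimensional vector before the dense layer.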

Data Augmentation Layer¶

In [ ]:

from tensorflow.keras.layers.experimental import preprocessing as KerasPreprocessing

In [ ]:

# Augmentation pipeline built from Keras preprocessing layers
data_augmentation = tf.keras.models.Sequential([
    KerasPreprocessing.RandomFlip('horizontal'),
    KerasPreprocessing.RandomRotation(0.2),
    KerasPreprocessing.RandomZoom(0.2),
    KerasPreprocessing.RandomHeight(0.2),
    KerasPreprocessing.RandomWidth(0.2),
    # RandomHeight/RandomWidth change the image size, so resize back to the model input size
    KerasPreprocessing.Resizing(height=IMAGE_DIM[0], width=IMAGE_DIM[1]),
    KerasPreprocessing.Rescaling(1/255.)
], name='data_augmentation')

Compare normal vs augmented image¶

In [ ]:

batch = imgdir.load(1)
imgen = next(batch)
rand_image, label = np.squeeze(imgen.train_images), np.squeeze(imgen.train_labels)

# The augmentation model expects a leading batch dimension
aug_image = data_augmentation(tf.expand_dims(rand_image, axis=0))

# Plot the original and the augmented image side by side
fig, axn = plt.subplots(1, 2, figsize=(12, 4))
for ax, img, img_type in zip(axn, [rand_image, aug_image], ['Normal', 'Augmented']):
    ax.imshow(np.squeeze(img))
    ax.set_axis_off()
    ax.set_title(img_type, fontdict=dict(size=20, weight='bold'))

Running a series of transfer learning experiments¶

Experiment with:

- Three different proportions of data: 1_percent, 10_percent, 100_percent

- Two kinds of transfer learning: Feature Extraction, Fine Tuning

- Two models: EfficientNetB0, ResNet50V2
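The three factors above multiply out to 3 x 2 x 2 = 12 runs. A minimal sketch of enumerating that grid with `itertools.product` follows. The dictionary keys and the `experiment_grid` name are illustrative assumptions and are not part of the notebook's `FoodClassificationExperiment` class.

```python
from itertools import product

data_pcts = ['1_percent', '10_percent', '100_percent']
modes = ['feature_extraction', 'fine_tuning']
model_names = ['EfficientNetB0', 'ResNet50V2']

# One configuration per combination of data size, training mode and base model
experiment_grid = [
    {'data_pct': data_pct, 'mode': mode, 'model': model_name}
    for data_pct, mode, model_name in product(data_pcts, modes, model_names)
]

print(len(experiment_grid))
for config in experiment_grid[:3]:
    print(config)
```

Listing the grid up front makes it easier to give each run a unique name, for example when logging to TensorBoard.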

In [ ]:

from src.utils import create_tensorboard_callback
import os

In [ ]:

class FoodClassificationExperiment:
    # Class-level configuration shared by every experiment
    DATA_DIR = {'1_percent': '../data/10_food_classes_1_percent',
                '10_percent': '../data/10_food_classes_10_percent',
                '100_percent': '../data/10_food_classes_all_data'}
    IMAGE_DIM = (224, 224)
    NUM_CHANNELS = 3
    INPUT_SHAPE = (*IMAGE_DIM, NUM_CHANNELS)
    BATCH_SIZE = 32
    VALIDATION_SPLIT = 0.2
    CLASSIFICATION_TYPE = 'categorical'
    SCALING_FACTOR = 1/255.
    SEED = 42
    # Note: class attributes are not visible inside a comprehension body, so IMAGE_DIM
    # below resolves to the module-level IMAGE_DIM defined earlier in the notebook
    IMAGE_DATA_DIRECTORY = {data_pct: ClassicImageDataDirectory(data_dir, IMAGE_DIM, np.uint8) for data_pct, data_dir in DATA_DIR.items()}
    BASE_EXPERIMENT = 'transfer_learning_experiments'
    TASK = '10_food_multiclass_classification'
    TFHUB_LOG_DIR = os.path.abspath('../tensorboard_logs/')
    EXPERIMENT_COUNTER = 1

    def __init__(self, data_pct, pretrained_model, data_augment=True, scale=True, name=None):
        self.data_pct = data_pct
        # Clone the base model (and copy its weights) so experiments do not share state
        self.pretrained_model = tf.keras.models.clone_model(pretrained_model)
        self.pretrained_model.set_weights(pretrained_model.get_weights())
        self.data_augment = data_augment
        self.scale = scale
        self.data_dir = self.DATA_DIR[data_pct]
        self.imgdir = self.IMAGE_DATA_DIRECTORY[data_pct]
        self.mode = 'feature_extraction'
        self.training_history = []
        self._assign_name(name)

    def _assign_name(self, name):
        exp_id = self.EXPERIMENT_COUNTER
        if name is None:
            name = f'experiment_{exp_id}'
        self.name = name
        self.__class__.EXPERIMENT_COUNTER += 1

    def preprocess_data(self):
        self.train_datagen = ImageDataGenerator(validation_split=self.VALIDATION_SPLIT)
        self.test_datagen = ImageDataGenerator()

    def setup_directories(self):
        self.train_dir = self.imgdir.train['dir']
        self.test_dir = self.imgdir.test['dir']

    def import_data_from_directories(self):
        self.train_data = self.train_datagen.flow_from_directory(directory=self.train_dir, target_size=self.IMAGE_DIM,
                                                                 batch_size=self.BATCH_SIZE, class_mode=self.CLASSIFICATION_TYPE,
                                                                 subset='training', seed=self.SEED)
        self.validation_data = self.train_datagen.flow_from_directory(directory=self.train_dir, target_size=self.IMAGE_DIM,
                                                                      batch_size=self.BATCH_SIZE, class_mode=self.CLASSIFICATION_TYPE,
                                                                      subset='validation', seed=self.SEED)
        self.test_data = self.test_datagen.flow_from_directory(directory=self.test_dir, target_size=self.IMAGE_DIM,
                                                               batch_size=self.BATCH_SIZE, class_mode=self.CLASSIFICATION_TYPE,
                                                               shuffle=False)

    def create_model(self):
        inputs = layers.Input(shape=self.INPUT_SHAPE, name='input_layer')
        if self.data_augment:
            x = self._get_data_augment_layer()(inputs)
        else:
            x = inputs
        if self.scale:
            x = KerasPreprocessing.Rescaling(self.SCALING_FACTOR)(x)
        # Start in feature-extraction mode: the whole base model is frozen
        self.pretrained_model.trainable = False
        features = self.pretrained_model(x)
        pooled = layers.GlobalAvgPool2D(name='global_avg_pooling_layer')(features)
        outputs = layers.Dense(self.imgdir.n_classes, activation='softmax', name='output_layer')(pooled)
        self.model = tf.keras.models.Model(inputs, outputs)

    def compile_model(self):
        self.model.compile(loss=losses.categorical_crossentropy, optimizer=optimizers.Adam(),
                           metrics=[KerasMetrics.f1, 'accuracy'])

    def _get_data_augment_layer(self):
        data_augment_layer = tf.keras.models.Sequential([
            KerasPreprocessing.RandomFlip('horizontal'),
            KerasPreprocessing.RandomRotation(0.2),
            KerasPreprocessing.RandomZoom(0.2),
            KerasPreprocessing.RandomHeight(0.2),
            KerasPreprocessing.Resizing(height=self.IMAGE_DIM[0], width=self.IMAGE_DIM[1])
        ])
        return data_augment_layer

    def set_training_mode(self, mode, unfreeze_last_n=None):
        # Call compile_model() again after changing the mode so the new trainable flags take effect
        if mode == 'feature_extraction':
            assert unfreeze_last_n is None, 'This argument is only valid for mode == "fine_tuning"'
            self.pretrained_model.trainable = False
        elif mode == 'fine_tuning':
            # Guard against None (TypeError in the slice) and 0 (the slice [:-0] is empty, so nothing is frozen)
            assert unfreeze_last_n, 'unfreeze_last_n must be a positive integer for mode == "fine_tuning"'
            self.pretrained_model.trainable = True
            # Freeze every layer except the last `unfreeze_last_n`
            for layer in self.pretrained_model.layers[:-unfreeze_last_n]:
                layer.trainable = False
        self.mode = mode

    def run(self, epochs=10, tfhub_log=False):
        if tfhub_log:
            exp_name = os.path.join(self.BASE_EXPERIMENT, self.name)
            model_callbacks = [create_tensorboard_callback(exp_name, self.TASK, self.TFHUB_LOG_DIR)]
        else:
            model_callbacks = []

        # Resume from the epoch after the last completed one (epoch indices are 0-based)
        try:
            initial_epoch = self.model.history.epoch[-1] + 1
        except (IndexError, AttributeError):
            initial_epoch = 0

        self.model.fit(self.train_data, steps_per_epoch=len(self.train_data),
                       validation_data=self.validation_data, validation_steps=len(self.validation_data),
                       epochs=epochs+initial_epoch, initial_epoch=initial_epoch, callbacks=model_callbacks)

        history_dict = self.model.history.history.copy()
        history_dict['epoch'] = self.model.history.epoch
        self.training_history.append((self.mode, history_dict))

    def plot_learning_curve(self, metric='loss'):
        fig, ax = plt.subplots()
        max_epoch = self.training_history[-1][1]['epoch'][-1]

        # One segment per call to run(), labelled with the training mode used
        for i, (mode, history_dict) in enumerate(self.training_history):
            ax.plot(history_dict['epoch'], history_dict[metric], color='blue', label=metric)
            ax.plot(history_dict['epoch'], history_dict[f'val_{metric}'], color='orange', label=f'val_{metric}')

            epochs = history_dict['epoch']
            mid_epoch = (epochs[0] + epochs[-1])/2 - 0.5
            ax.text(mid_epoch/(max_epoch+1), 0.5, mode, transform=ax.transAxes)

            if i == 0:
                ax.set_xlim(0, max_epoch)
            else:
                # Mark where a new training phase starts
                ax.axvline(x=history_dict['epoch'][0], color='green')

        ax.set_xlabel('epoch')
        ax.set_ylabel(metric)
        return ax
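The slicing used in `set_training_mode` can be tried without TensorFlow. The sketch below assumes a hypothetical `StubLayer` class that only carries a `trainable` flag, and a hypothetical helper `unfreeze_last_n_layers`. Neither is part of Keras or of the class above. It shows how `layers[:-n]` selects everything except the last `n` layers.

```python
class StubLayer:
    """Hypothetical stand-in for a Keras layer: a name and a trainable flag."""

    def __init__(self, name):
        self.name = name
        self.trainable = True


def unfreeze_last_n_layers(layers, n):
    """Freeze every layer except the last n (n must be a positive integer)."""
    if not isinstance(n, int) or n <= 0:
        raise ValueError('n must be a positive integer')
    for layer in layers:
        layer.trainable = True
    # layers[:-n] is every layer except the last n
    for layer in layers[:-n]:
        layer.trainable = False
    return layers


stub_layers = [StubLayer(f'block_{i}') for i in range(6)]
unfreeze_last_n_layers(stub_layers, 2)
flags = [layer.trainable for layer in stub_layers]
print(flags)  # [False, False, False, False, True, True]
```

The explicit check on `n` matters because `layers[:-0]` is the same as `layers[:0]`, an empty slice, so passing 0 would silently leave every layer trainable. With a real Keras model the model also has to be compiled again after the flags change.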
